ANOVA#

Introduction#

Analysis of Variance (ANOVA) is a powerful statistical technique used to compare the means of three or more groups. It’s a cornerstone of biostatistics, helping us understand how different factors influence biological phenomena. We use it to analyze everything from the effects of various drugs on patient outcomes to the impact of environmental changes on species populations.

The type of ANOVA we use depends on how our data is structured. One-way ANOVA is used when we have one categorical factor with at least three levels (e.g., comparing the effectiveness of three different fertilizers on plant growth). However, many biological studies involve more complex designs.

For instance, we might want to see how a treatment affects the same individuals over time. This calls for a repeated-measures ANOVA, which accounts for the correlation between measurements taken on the same subject. Or, we might have a study with both between-subject factors (like genotype) and within-subject factors (like time). This is where mixed-design ANOVA comes in, allowing us to analyze the interplay of these different factors.

Regardless of the type of ANOVA, the fundamental principle remains the same: we partition the observed variance in the data to determine if the variation between groups is greater than the variation within groups. This helps us determine if the factor we’re interested in has a statistically significant effect.

In this chapter, we’ll explore the theory behind ANOVA, including the concepts of variance partitioning and the F-statistic. Then, we’ll dive into the practical application of ANOVA in Python, using powerful libraries like Pingouin and statsmodels. We’ll cover one-way and two-way ANOVA, repeated-measures ANOVA, and mixed-design ANOVA, providing us with the tools and knowledge to analyze a wide range of biological datasets.

Mathematical concepts#

Variance#

At its heart, ANOVA is all about analyzing variance. Recall from the earliest chapters on quantifying the scatter of continuous data that variance is a statistical measure of the spread or dispersion of data points around the mean. In simpler terms, it tells us how much the individual data points deviate from the average. A high variance indicates that the data points are spread out over a wide range of values, while a low variance indicates that they are clustered closely around the mean.

ANOVA utilizes variance to make inferences about the means of different groups. The core idea is to compare the variance between the groups to the variance within the groups:

Variance between groups: this reflects the differences between the means of the groups. If the means are very different, the between-group variance will be high.

Variance within groups: this reflects the variability of the data points within each group. Even if the group means are different, there will still be some variation among the individuals within each group.

By comparing these two sources of variance, ANOVA can determine whether the differences between the group means are statistically significant or could plausibly be explained by random sampling variation alone. If the between-group variance is substantially larger than the within-group variance, this suggests that the group means genuinely differ.

To calculate the variance of a dataset, we follow these steps:

Calculate the mean: sum all the data points and divide by the number of data points.

Calculate the deviations: subtract the mean from each data point.

Square the deviations: square each of the differences calculated in step 2.

Sum the squared deviations: add up all the squared deviations.

Divide by the degrees of freedom: divide the sum of squared deviations by the number of data points minus 1 (this is the degrees of freedom).

The formula for (sample) variance is:

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

where:

\(x_i\) = each individual data point

\(\bar{x}\) = the mean of the data set

\(n\) = the number of data points
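The five steps listed above translate directly into code. The sketch below walks through them on a small made-up sample (illustrative values only). It then cross-checks the result with NumPy's var function using ddof=1, which applies the same \(n - 1\) denominator.

```python
import numpy as np

# Illustrative sample (made-up values, not from any dataset in this chapter)
x = np.array([4.0, 7.0, 6.0, 3.0, 5.0])

n = len(x)
mean = x.sum() / n          # Step 1: the mean
deviations = x - mean       # Step 2: deviations from the mean
squared = deviations ** 2   # Step 3: squared deviations
ss = squared.sum()          # Step 4: sum of squared deviations
variance = ss / (n - 1)     # Step 5: divide by the degrees of freedom (n - 1)

print(f"mean = {mean}, SS = {ss}, s² = {variance}")
# NumPy divides by n - ddof, so ddof=1 gives the sample variance
print(np.isclose(variance, np.var(x, ddof=1)))
```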

Partitioning the sum of squares#

ANOVA takes the total variability in our data and divides it into different parts, like slicing a pie. This “slicing” is called partitioning the sum of squares. Each slice of the pie represents a source of variation. In one-way ANOVA, we have two main slices:

Between-group variability: this represents how much the group means vary from the overall mean of the data. In essence, it captures the differences between our groups.

Within-group variability: this represents how much the individual data points within each group vary from their respective group means. It captures the inherent variation within each group.

Now, here’s where the variance formula becomes crucial. To quantify these two types of variability, we use a slightly modified version of the variance formula. Instead of dividing by (n-1), we’ll focus on the sum of squared deviations, i.e., the numerator in the variance formula:

Sum of squares total (SST): this represents the total variability in the data. We calculate it by finding the squared difference between each data point and the overall mean of the data, then summing those squared differences. This is represented as \(\text{SS}_\text{total}\).

Sum of squares between (SSB): this represents the variability between the groups. We calculate it by finding the squared difference between each group mean and the overall mean, weighting it by the number of observations in each group, and then summing those weighted squared differences. This is sometimes referred to as the “effect” and is represented as \(\text{SS}_\text{effect}\).

Sum of squares within (SSW): this represents the variability within each group. We calculate it by finding the squared difference between each data point and its group mean, then summing all those squared differences across all groups. This is also known as the “error” and is represented as \(\text{SS}_\text{error}\).

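More formally, we can express these sums of squares using the following equations:

$$\text{SS}_\text{total} = \sum_{i=1}^{r}\sum_{j=1}^{n_i}(x_{ij} - \overline{x})^2$$

$$\text{SS}_\text{effect} = \sum_{i=1}^{r} n_i(\overline{x}_i - \overline{x})^2$$

$$\text{SS}_\text{error} = \sum_{i=1}^{r}\sum_{j=1}^{n_i}(x_{ij} - \overline{x}_i)^2$$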

where:

\(i=1,\dots,r\) represents the groups (from 1 to r total groups)

\(j=1,\dots,n_i\) represents the observations within each group

\(r \) is the total number of groups

\(n_i\) is the number of observations in the \(i\)th group

\(x_{ij}\) is the jth observation in the ith group

\(\overline{x_i}\) is the mean of the ith group, or “group mean”

\(\overline{x}\) is the overall mean of the data, or “grand mean”

These equations provide a precise way to calculate the different components of variance. SST captures the total variability around the grand mean, SSB captures the variability of group means around the grand mean, and SSW captures the variability of individual data points around their group means.

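The beauty of this partitioning is that the total variability is equal to the sum of the between-group variability and the within-group variability:

$$\text{SS}_\text{total} = \text{SS}_\text{effect} + \text{SS}_\text{error}, \quad \text{i.e.,} \quad \text{SST} = \text{SSB} + \text{SSW}$$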

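In fact, adding and subtracting the group mean inside each squared deviation and expanding the square gives:

$$
\begin{aligned}
\text{SST} &= \sum_i \sum_j (x_{ij} - \overline{x})^2 = \sum_i \sum_j \left[(x_{ij} - \overline{x}_i) + (\overline{x}_i - \overline{x})\right]^2 \\
&= \sum_i \sum_j (x_{ij} - \overline{x}_i)^2 + 2 \sum_i (\overline{x}_i - \overline{x}) \sum_j (x_{ij} - \overline{x}_i) + \sum_i \sum_j (\overline{x}_i - \overline{x})^2
\end{aligned}
$$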

The middle term simplifies to zero. For each group \(i\), the term \(\sum_j (x_{ij} - \overline{x}_i)\) sums the deviations of the data points from the group mean. By the definition of the mean, \(\overline{x}_i = \frac{1}{n_i}\sum_j x_{ij}\), so this sum equals \(\sum_j x_{ij} - n_i \overline{x}_i = 0\). Since it is zero for every group, the entire middle term vanishes.

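The last term can be simplified by recognizing that \((\overline{x}_i - \overline{x})\) is constant for all data points within group \(i\), i.e., with regard to \(j\). Therefore, we can move it outside the inner summation. Finally, \(\sum_j 1 = n_i\) because it simply counts the number of data points in group \(i\). Therefore:

$$\sum_i \sum_j (\overline{x}_i - \overline{x})^2 = \sum_i (\overline{x}_i - \overline{x})^2 \sum_j 1 = \sum_i n_i (\overline{x}_i - \overline{x})^2 = \text{SSB}$$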

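Putting it all together, we have:

$$\text{SST} = \sum_i \sum_j (x_{ij} - \overline{x}_i)^2 + \sum_i n_i (\overline{x}_i - \overline{x})^2 = \text{SSW} + \text{SSB}$$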

By breaking down the total variability, we can assess the relative contributions of between-group and within-group variation. If the between-group variability (SSB) is a large proportion of the total variability (SST), it suggests that the group means are quite different, and the factor we’re investigating has a strong effect.
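As a quick numerical check of the partitioning identity, the sketch below computes SST, SSB and SSW from their definitions on three small made-up groups (hypothetical values). It confirms that SST = SSB + SSW. The ratio SSB/SST is the proportion of the total variability explained by the grouping.

```python
import numpy as np

# Three small made-up groups (hypothetical values)
groups = [np.array([3.0, 4.0, 5.0]),
          np.array([6.0, 7.0, 8.0, 7.0]),
          np.array([9.0, 10.0, 11.0])]

all_values = np.concatenate(groups)
grand_mean = all_values.mean()

# SST: every observation against the grand mean
sst = ((all_values - grand_mean) ** 2).sum()
# SSB: each group mean against the grand mean, weighted by the group size n_i
ssb = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
# SSW: every observation against its own group mean
ssw = sum(((g - g.mean()) ** 2).sum() for g in groups)

print(f"SST = {sst:.3f}, SSB + SSW = {ssb + ssw:.3f}")
print(f"Proportion explained (SSB/SST) = {ssb / sst:.3f}")
```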

This partitioning of the sum of squares is directly related to comparing different models to explain the data, as discussed in an earlier chapter. In one-way ANOVA, we’re essentially comparing two models: a null model that assumes no difference between group means (like a single horizontal line) and an alternative model that allows for differences between group means. SST represents the total variability under the null model. SSW represents the variability remaining after fitting the alternative model. SSB, the counterpart of the regression sum of squares (SSR), represents the reduction in variability achieved by using the alternative model. This connects to the concept of R-squared, which is the proportion of total variation explained by the alternative model (SSB/SST).

F-statistic#

Recall from our earlier exploration of the comparison of models that we used the F-statistic to assess the significance of a regression model. The F-statistic isn’t unique to ANOVA; it’s a general tool for comparing models and assessing the amount of variation explained by a particular model.

In the context of ANOVA, we’re essentially comparing two models: a null model that assumes no difference between group means and an alternative model that allows for differences. The F-statistic helps us determine if the alternative model (with group differences) provides a significantly better fit to the data than the null model.

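Recall that in the context of comparing models, with \(\text{DF}_\text{n}\) and \(\text{DF}_\text{d}\) denoting the numerator and denominator degrees of freedom, we calculated the F-statistic as:

$$F = \frac{\text{MSR}}{\text{MSE}} = \frac{(\text{SST} - \text{SSE})/\text{DF}_\text{n}}{\text{SSE}/\text{DF}_\text{d}}$$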

where:

MSR is the mean square regression

MSE is the mean square error

SST is the total sum of squares

SSE is the sum of squares error

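In ANOVA, we use slightly different terminology, but the underlying concept is the same. We calculate the F-statistic as:

$$F = \frac{\text{MS}_\text{effect}}{\text{MS}_\text{error}} = \frac{\text{SS}_\text{effect}/(r - 1)}{\text{SS}_\text{error}/(N - r)}$$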

where:

\(\text{MS}_{\text{effect}}\) is the mean square effect (analogous to MSR)

\(\text{MS}_{\text{error}}\) is the mean square error (analogous to MSE)

\(\text{SS}_{\text{effect}}\) is the sum of squares effect (analogous to SSR)

\(\text{SS}_{\text{error}}\) is the sum of squares error (analogous to SSE)

\(r\) is the number of groups

\(N\) is the total number of observations

Essentially, the “effect” in ANOVA corresponds to the “regression” in the regression context. Both represent the improvement in explaining the data achieved by the more complex model.
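The ANOVA F-statistic only needs the two sums of squares and the two degrees of freedom. The helper below has a hypothetical name, written for illustration only, and implements the formula directly. The check at the end compares its result with scipy.stats.f_oneway on randomly generated groups.

```python
import numpy as np
from scipy import stats

def f_from_sums_of_squares(ss_effect, ss_error, r, N):
    """Hypothetical helper: F = MS_effect / MS_error, with DF r - 1 and N - r."""
    ms_effect = ss_effect / (r - 1)
    ms_error = ss_error / (N - r)
    return ms_effect / ms_error

# Simulated groups of unequal size (random values, for illustration only)
rng = np.random.default_rng(42)
groups = [rng.normal(50, 8, size=n) for n in (5, 5, 5, 4)]

values = np.concatenate(groups)
grand_mean = values.mean()
ss_effect = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
ss_error = sum(((g - g.mean()) ** 2).sum() for g in groups)

F = f_from_sums_of_squares(ss_effect, ss_error, r=len(groups), N=len(values))
F_scipy = stats.f_oneway(*groups).statistic
print(f"Manual F = {F:.4f}, scipy F = {F_scipy:.4f}")
```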

P value#

The F-statistic itself doesn’t directly tell us whether the observed differences between group means are statistically significant. To make that determination, we need the P value.

The P value is the probability of obtaining an F-statistic at least as extreme as the one we calculated, assuming that the null hypothesis is true, i.e., assuming that all population group means are equal: \(\mu_1 = \mu_2 = \dots = \mu_r\).

To find the P value, we use the F-distribution. This is a probability distribution that describes the behavior of F-statistics under the null hypothesis. The shape of the F-distribution depends on the degrees of freedom associated with the between-group and within-group variances.

In one-way ANOVA, the F-distribution has:

Degrees of freedom for the numerator: \(r - 1\) (where \(r\) is the number of groups)

Degrees of freedom for the denominator: \(N - r\) (where \(N\) is the total number of observations)

Using these degrees of freedom, we can look up the P value corresponding to our calculated F-statistic in a statistical table or use software to calculate it directly (as we’ll see in the Python examples).

A small P value (typically less than 0.05) indicates that it’s unlikely to observe such an extreme F-statistic if the null hypothesis were true. This provides evidence against the null hypothesis, leading us to reject it and conclude that there’s a statistically significant difference between at least one pair of group means.

On the other hand, a large P value (greater than 0.05) suggests that the observed F-statistic is not unusual under the null hypothesis. In this case, we fail to reject the null hypothesis and conclude that there’s not enough evidence to suggest a difference between the group means.
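The two ways of reaching a decision are equivalent: comparing the P value with \(\alpha\), or comparing F with the critical value F* of the F-distribution. The sketch below illustrates this with scipy.stats.f. It uses the degrees of freedom of the hair-color example later in the chapter (4 groups, 19 observations), and the F values looped over are arbitrary.

```python
from scipy import stats

alpha = 0.05
r, N = 4, 19               # number of groups, total number of observations
dfn, dfd = r - 1, N - r    # numerator and denominator degrees of freedom

# Critical value F*: the point leaving an area alpha in the right tail
f_crit = stats.f.ppf(1 - alpha, dfn, dfd)
print(f"F* = {f_crit:.3f}")

for F in (1.0, 3.0, 6.0):               # arbitrary F-statistics
    p = stats.f.sf(F, dfn, dfd)         # right-tail area beyond F (= 1 - cdf)
    print(f"F = {F:.1f}: P = {p:.4f}, "
          f"P < alpha: {p < alpha}, F > F*: {F > f_crit}")
```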

Effect size#

Eta-squared#

While the F-statistic and P value tell us whether there’s a statistically significant difference between group means, they don’t tell us how large that difference is. To quantify the magnitude of the effect, we use a measure called eta-squared (\(\eta^2\)).

Eta-squared represents the proportion of total variability in the data that is accounted for by the differences between groups. It’s calculated as:

$$\eta^2 = \frac{\text{SS}_\text{effect}}{\text{SS}_\text{total}}$$

Eta-squared can be interpreted similarly to R-squared in regression. For example, an \(\eta^2\) of 0.10 means that 10% of the total variability in the data is explained by the differences between the groups.

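In one-way ANOVA, eta-squared is equivalent to partial eta-squared (\(\eta_p^2\)), which is a more general measure of effect size used in other ANOVA designs. Partial eta-squared is calculated as:

$$\eta_p^2 = \frac{\text{SS}_\text{effect}}{\text{SS}_\text{effect} + \text{SS}_\text{error}}$$

Because \(\text{SS}_\text{effect} + \text{SS}_\text{error} = \text{SS}_\text{total}\) in one-way ANOVA, the two measures coincide.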

Eta-squared provides valuable information about the practical significance of our findings. A larger eta-squared indicates a stronger effect, meaning that the group differences account for a greater proportion of the overall variability in the data.

While there are no strict cutoffs, general guidelines suggest that:

\(\eta^2\) = 0.01 indicates a small effect

\(\eta^2\) = 0.06 indicates a medium effect

\(\eta^2\) = 0.14 indicates a large effect
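Both effect sizes follow directly from the sums of squares. The sketch below uses hypothetical helper names. It plugs in the SSB and SSW values obtained for the hair-color example later in this chapter (1360.7 and 1001.8). Because \(\text{SS}_\text{total} = \text{SS}_\text{effect} + \text{SS}_\text{error}\) in one-way ANOVA, the two measures come out identical.

```python
def eta_squared(ss_effect, ss_total):
    """Hypothetical helper: proportion of total variability explained."""
    return ss_effect / ss_total

def partial_eta_squared(ss_effect, ss_error):
    """Hypothetical helper: effect relative to effect + error only."""
    return ss_effect / (ss_effect + ss_error)

ss_effect, ss_error = 1360.7, 1001.8   # SSB and SSW from the hair-color example
ss_total = ss_effect + ss_error        # one-way ANOVA: SST = SSB + SSW

eta2 = eta_squared(ss_effect, ss_total)
np2 = partial_eta_squared(ss_effect, ss_error)
print(f"eta² = {eta2:.3f}, partial eta² = {np2:.3f}")
```

By the guidelines above, a value of this size would be read as a large effect.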

Omega-squared#

While eta-squared (\(\eta^2\)) is a commonly used effect size measure in ANOVA, it tends to overestimate the population effect size, especially when the sample size is small. This bias arises because eta-squared is calculated based on the sample sums of squares, which are inherently influenced by sampling variability. To address this bias, we can use omega squared (\(\omega^2\)), which provides a less biased estimate of the population effect size. Omega squared adjusts for the degrees of freedom and the within-group variance, resulting in a more accurate estimate, particularly for smaller samples:

$$\omega^2 = \frac{\text{SS}_\text{effect} - (r - 1)\,\text{MS}_\text{error}}{\text{SS}_\text{total} + \text{MS}_\text{error}}$$

where:

\(\text{SS}_{\text{effect}}\) is the sum of squares between groups

\(\text{MS}_{\text{error}}\) is the mean square error (within groups)

\(\text{SS}_{\text{total}}\) is the total sum of squares

\(r\) is the number of groups

Omega squared, like eta-squared, represents the proportion of variance in the dependent variable that is accounted for by the independent variable (or factor). However, it provides a more accurate estimate, especially when sample sizes are small. It will generally be smaller than eta-squared, reflecting its less biased nature. The difference between the two measures becomes less pronounced as the sample size increases.

While \(\eta^2\) is often reported due to its simplicity and familiarity, it’s generally recommended to use \(\omega^2\) as a more accurate measure of effect size in ANOVA, particularly when dealing with smaller samples.
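To see the size of the correction, the sketch below computes \(\omega^2\) with a hypothetical helper. It uses the same hair-color sums of squares, with \(r = 4\) groups and \(N = 19\) observations, and compares the result with \(\eta^2\).

```python
def omega_squared(ss_effect, ss_error, r, N):
    """Hypothetical helper: omega-squared for a one-way ANOVA."""
    ss_total = ss_effect + ss_error
    ms_error = ss_error / (N - r)
    return (ss_effect - (r - 1) * ms_error) / (ss_total + ms_error)

ss_effect, ss_error, r, N = 1360.7, 1001.8, 4, 19   # hair-color example values
omega2 = omega_squared(ss_effect, ss_error, r, N)
eta2 = ss_effect / (ss_effect + ss_error)
print(f"omega² = {omega2:.3f} vs eta² = {eta2:.3f}")
```

With only 19 observations, \(\omega^2\) is noticeably smaller than \(\eta^2\). This is consistent with the upward bias of \(\eta^2\) in small samples described above.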

By considering both the statistical significance (P value) and the effect size (\(\eta^2\) or \(\omega^2\)), we can gain a more complete understanding of the results of our ANOVA analysis.

Calculating ANOVA manually#

Before diving into using dedicated Python libraries for ANOVA, let’s solidify our understanding by manually calculating an ANOVA table and visualizing the F-distribution. This will give us a deeper appreciation for the underlying calculations and how to interpret the results.

Manual calculation of the ANOVA table#

An ANOVA table provides a structured summary of the key calculations involved in ANOVA. Here’s how we can construct it step-by-step:

Organize the data

Calculate the degrees of freedom

Calculate the sums of squares:

Calculate SST / SStotal (total sum of squares)

Calculate SSB / SSeffect (sum of squares between groups)

Calculate SSW / SSerror (sum of squares within groups)

Calculate the mean squares

Calculate the F-statistic

Determine the P value using the scipy.stats module

Construct the ANOVA table

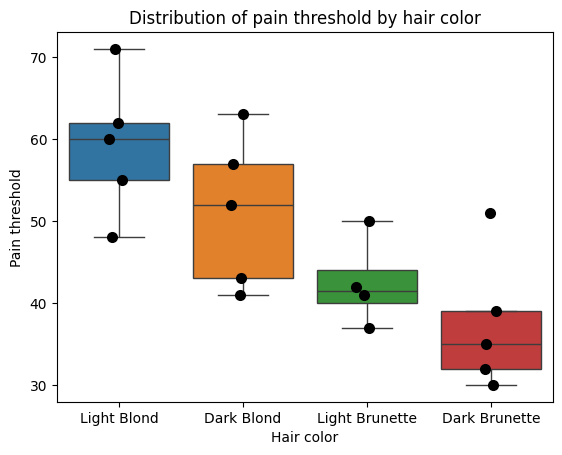
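The step-by-step recipe above can be bundled into one function. The sketch below is a hypothetical helper, not part of Pingouin or statsmodels. It takes a list of groups, computes the degrees of freedom, sums of squares, mean squares, F and P, and returns them laid out like an ANOVA table. The groups in the usage example are simulated.

```python
import numpy as np
import pandas as pd
from scipy import stats

def one_way_anova_table(groups):
    """Hypothetical helper: one-way ANOVA table from a list of 1-D arrays."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    values = np.concatenate(groups)
    r, N = len(groups), len(values)
    grand_mean = values.mean()

    # Sums of squares (SSW obtained from the partition SST = SSB + SSW)
    sst = ((values - grand_mean) ** 2).sum()
    ssb = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ssw = sst - ssb

    # Degrees of freedom, mean squares, F-statistic and P value
    df_b, df_w = r - 1, N - r
    ms_b, ms_w = ssb / df_b, ssw / df_w
    F = ms_b / ms_w
    p = stats.f.sf(F, df_b, df_w)

    return pd.DataFrame(
        {"SS": [ssb, ssw, sst],
         "DF": [df_b, df_w, N - 1],
         "MS": [ms_b, ms_w, np.nan],
         "F": [F, np.nan, np.nan],
         "P value": [p, np.nan, np.nan]},
        index=["Between groups", "Within groups", "Total"],
    )

# Usage with simulated groups (4 groups of 5 observations)
rng = np.random.default_rng(1)
sim_groups = [rng.normal(mu, 8, size=5) for mu in (40, 45, 50, 55)]
print(one_way_anova_table(sim_groups).round(4))
```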

To solidify our understanding of the calculations behind ANOVA, let’s manually compute the sums of squares for the effect (SSB) and error (SSW). We’ll use the anova dataset from the Pingouin library as an example. This dataset provides pain threshold scores for individuals with different hair colors (light blond, dark blond, light brunette, and dark brunette). The data come from a study by McClave and Dietrich (1991) investigating whether hair color is related to pain tolerance.

import pandas as pd

import pingouin as pg

# Load the 'anova' dataset from pingouin

data_anova = pg.read_dataset('anova')

# Display the first few rows of the DataFrame

data_anova.head()

|  | Subject | Hair color | Pain threshold |
|---|---|---|---|
| 0 | 1 | Light Blond | 62 |
| 1 | 2 | Light Blond | 60 |
| 2 | 3 | Light Blond | 71 |
| 3 | 4 | Light Blond | 55 |
| 4 | 5 | Light Blond | 48 |

import seaborn as sns

import matplotlib.pyplot as plt

# Create the boxplot

sns.boxplot(x='Hair color', y='Pain threshold', data=data_anova, hue='Hair color', showfliers=False)

# Overlay the stripplot

sns.stripplot(

x='Hair color', y='Pain threshold', data=data_anova,

color='black', size=8)

# Add plot labels and title

plt.xlabel('Hair color')

plt.ylabel('Pain threshold')

plt.title('Distribution of pain threshold by hair color');

# Parameters of the analysis

r = data_anova['Hair color'].nunique() # Number of groups/conditions

N = len(data_anova) # Number of total values

DF_between = r - 1 # Degrees of freedom for MSeffect

DF_within = N - r # Degrees of freedom for MSerror

print(f"There are {r} different conditions and {N} total values")

print(

f"This leads to {DF_between} and {DF_within} degrees of freedom for the effect (SSB) and error (SSW) mean squares respectively")

There are 4 different conditions and 19 total values

This leads to 3 and 15 degrees of freedom for the effect (SSB) and error (SSW) mean squares respectively

# Calculate the overall mean of 'Pain threshold'

grand_mean = data_anova['Pain threshold'].mean()

print(f"Grand mean = {grand_mean:.2f}")

# Sums of squares

# $\text{SST} = \sum_i \sum_j (x_{ij} - \overline{x})^2$

SST = ((data_anova['Pain threshold'] - grand_mean)**2).sum()

# $\text{SSW} = \sum_i \sum_j (x_{ij} - \overline{x}_i)^2$

SSW = (

data_anova.groupby('Hair color')['Pain threshold']

.transform(lambda x: (x - x.mean())**2)

).sum()

SSB = SST - SSW

print(f"With SST = {SST:.1f} and SSW = {SSW:.1f}, we obtain SSB = {SSB:.1f}")

# Compute SSB from scratch for verification

SSB_scratch = (

data_anova.groupby('Hair color')['Pain threshold']

.apply(lambda x: x.count() * (x.mean() - grand_mean)**2)

).sum()

print(f"SSB computed from scratch = {SSB_scratch:.1f}")

Grand mean = 47.84

With SST = 2362.5 and SSW = 1001.8, we obtain SSB = 1360.7

SSB computed from scratch = 1360.7

Comparing three or more means with one-way ANOVA can be viewed as comparing how well two different models fit the data:

Null model: this model assumes that all populations have the same mean, which is equivalent to the grand mean of the combined data. In essence, this model suggests that there are no real differences between the groups, and any observed differences are due to random chance.

Alternative model: this model allows for the possibility that the population means are not all equal. This means that at least one group has a mean that is different from the others, suggesting that there are genuine differences between the groups.

| Hypothesis | Scatter from | Sum of squares | Percentage of variation | R² |
|---|---|---|---|---|
| Null | Grand mean | 2362.5 | 100 | |
| Alternative | Group means | 1001.8 | 42.4 | |
| Difference | | 1360.7 | 57.6 | 0.576 |

This table summarizes the partitioning of variance in our ANOVA analysis. It compares the scatter of the data around the grand mean (null hypothesis) with the scatter around the group means (alternative hypothesis).

The first row represents the null hypothesis, where we assume all data points are scattered around the grand mean. This accounts for 100% of the total variation.

The second row represents the alternative hypothesis, where we consider the variability of data points around their respective group means. This residual scatter amounts to 42.4% of the total variation.

The third row shows the difference between the two models, representing the variability explained by the differences between group means. This accounts for 57.6% of the total variation and corresponds to an R² of 0.576.

In essence, this table illustrates how ANOVA partitions the total variability and assesses the proportion of variability explained by the grouping factor. By comparing these two models, ANOVA helps us determine which model provides a better explanation of the observed data. If the alternative model fits the data significantly better than the null model, we have evidence to reject the null hypothesis and conclude that there are significant differences between the group means.

# MS

MS_between = SSB / DF_between

MS_within = SSW / DF_within

print(f"MS for SSB = {MS_between:.1f} and for SSW = {MS_within:.1f}")

MS for SSB = 453.6 and for SSW = 66.8

from scipy.stats import f

# F ratio and associated P value

f_ratio = MS_between / MS_within

p_value = f.sf(f_ratio, DF_between, DF_within) # Using the survival function (1 - cdf)

print(f"Finally, with an F ratio = {f_ratio:.4f}, the associated P value = {p_value:.5f}")

Finally, with an F ratio = 6.7914, the associated P value = 0.00411

ANOVA table#

We’ve explored the key components of analysis of variance: sums of squares (SST, SSB, SSW), degrees of freedom, and mean squares (MSeffect, MSerror). These elements come together in a structured way within the ANOVA table. This table provides a framework for comparing a model that assumes no difference between group means (our null hypothesis) to a model that allows for differences between group means.

Here’s the ANOVA table we constructed for our example analyzing the effect of hair color on pain threshold:

| Source of variation | Sum of squares | DF | MS | F-ratio | P value |
|---|---|---|---|---|---|
| Between Groups | 1360.7 | 3 | 453.6 | 6.791 | 0.0041 |
| Within Groups | 1001.8 | 15 | 66.8 | | |
| Total | 2362.5 | 18 | | | |

The table partitions the total variation (SST) into the variation explained by differences between the groups (SSB) and the unexplained variation within groups (SSW). The degrees of freedom (DF) are shown for each source of variation. The mean squares (MS) are calculated by dividing the sum of squares by the corresponding degrees of freedom. The F-ratio, calculated as MSbetween / MSwithin, compares the explained and unexplained variation, adjusted for their degrees of freedom. Finally, the P value helps us determine the statistical significance of the F-ratio.

If the null hypothesis were true (i.e., if there were no differences between the means of the hair color groups), we would expect the two MS values (MSbetween and MSwithin) to be similar, resulting in an F-ratio close to 1.0. In our example, however, the F-ratio is 6.791, suggesting that the differences between groups explain considerably more variation than would be expected by chance. The small P value (0.0041) provides strong evidence against the null hypothesis, leading us to conclude that there are significant differences in pain threshold between at least two of the hair color groups.
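The claim that F should hover around 1 under the null hypothesis can be checked by simulation. The sketch below assumes a hypothetical balanced design of 4 groups of 5 observations. All groups are drawn from the same normal population, so the null hypothesis is true by construction. It computes F for 5,000 simulated datasets and compares their average with the theoretical mean of the F-distribution, \(\text{DF}_\text{d}/(\text{DF}_\text{d} - 2)\), which is slightly above 1. Roughly 5% of the simulated F values should exceed the critical value at \(\alpha = 0.05\).

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Hypothetical balanced design: 4 groups of 5 observations each
r, n_per_group, n_sims = 4, 5, 5000
N = r * n_per_group
df_between, df_within = r - 1, N - r

# All groups come from the SAME normal population, so H0 is true by construction
samples = rng.normal(loc=50, scale=8, size=(n_sims, r, n_per_group))

group_means = samples.mean(axis=2)        # shape (n_sims, r)
grand_means = samples.mean(axis=(1, 2))   # shape (n_sims,)
ssb = n_per_group * ((group_means - grand_means[:, None]) ** 2).sum(axis=1)
ssw = ((samples - group_means[:, :, None]) ** 2).sum(axis=(1, 2))
f_null = (ssb / df_between) / (ssw / df_within)

f_crit = stats.f.ppf(0.95, df_between, df_within)
print(f"Mean simulated F: {f_null.mean():.3f} "
      f"(theoretical mean: {df_within / (df_within - 2):.3f})")
print(f"Share of simulated F above F* (alpha = 0.05): {(f_null > f_crit).mean():.3f}")
```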

ANOVA reconstruction from summary data#

One-way ANOVA can be computed without raw data, so long as we know the mean, sample size, and standard deviation of each group. The standard error can be used instead of the standard deviation, since \(s_i = \text{SE}_i \sqrt{n_i}\). This is particularly useful in situations where we only have access to aggregated data or when the original dataset is too large to store.

To reconstruct the ANOVA table from summary statistics, we can use the following formulas:

\(\text{SS}_\text{total} = \text{SS}_\text{effect} + \text{SS}_\text{error}\)

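\(\text{SS}_\text{effect} = \sum n_i (\overline{x_i} - \overline{x})^2\), where \(n_i\) is the sample size of group \(i\), \(\overline{x_i}\) is the mean of group \(i\), and \(\overline{x}\) is the overall mean. Without raw data, \(\overline{x}\) is the size-weighted average of the group means, \(\overline{x} = \sum n_i \overline{x_i} / N\).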

\(\text{SS}_\text{error} = \sum (n_i - 1) s_i^2\), where \(s_i^2\) is the variance of group \(i\)

To find the last formula, we start with the basic definition of SSW: \(\text{SS}_\text{error} = \sum_i \sum_j (x_{ij} - \overline{x}_i)^2\). Recall the formula for the variance of a sample from the very early chapters, where \(s^2 = \frac{\sum_{j}(x_j - \bar{x})^2}{n - 1}\). Notice the similarity between the numerator of the variance formula and the formula for SSW.

If we rearrange the variance formula to isolate the sum of squares, we get \(\sum_{j}(x_j - \bar{x})^2 = (n - 1) s^2\). Then, we apply this to each group \(i\) in the ANOVA to get \(\sum_{j=1}^{n_i}(x_{ij} - \overline{x}_i)^2 = (n_i - 1) s_i^2\).

Finally, summing these per-group sums of squares over all groups gives \(\text{SSW} = \text{SS}_\text{error} = \sum_i \sum_{j=1}^{n_i}(x_{ij} - \overline{x}_i)^2 = \sum_i (n_i - 1) s_i^2\).

Once we have the sums of squares, we can calculate the mean squares (MS) by dividing the sum of squares by the corresponding degrees of freedom. The F-statistic is then computed as the ratio of the mean squares (MSeffect / MSerror), and the P value can be obtained using the F-distribution.
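The summary-statistics route can be sketched as a small function with a hypothetical name. It accepts group means, standard deviations and sizes, computes the grand mean as the size-weighted average of the group means, and returns F and P. A first check reduces simulated raw data to its summary statistics and compares the result with scipy.stats.f_oneway on the raw values. A second call applies the helper to the hair-color summary statistics listed below.

```python
import numpy as np
from scipy import stats

def anova_from_summary(means, sds, ns):
    """Hypothetical helper: one-way ANOVA F and P from group summaries."""
    means, sds, ns = (np.asarray(a, dtype=float) for a in (means, sds, ns))
    r, N = len(means), ns.sum()
    grand_mean = (ns * means).sum() / N              # size-weighted grand mean
    ss_effect = (ns * (means - grand_mean) ** 2).sum()
    ss_error = ((ns - 1) * sds ** 2).sum()           # sum of (n_i - 1) s_i²
    F = (ss_effect / (r - 1)) / (ss_error / (N - r))
    p = stats.f.sf(F, r - 1, N - r)
    return F, p

# Check: simulated raw data reduced to summary statistics
rng = np.random.default_rng(7)
raw = [rng.normal(mu, 6, size=n) for mu, n in ((50, 6), (55, 8), (47, 5))]
F, p = anova_from_summary(
    means=[g.mean() for g in raw],
    sds=[g.std(ddof=1) for g in raw],   # sample SD (n - 1 denominator)
    ns=[len(g) for g in raw],
)
print(f"From summaries: F = {F:.4f}, P = {p:.4f}")
print(f"From raw data:  F = {stats.f_oneway(*raw).statistic:.4f}")

# Hair-color summary statistics (group means, SDs and counts)
F_hair, p_hair = anova_from_summary(
    means=[51.2, 37.4, 59.2, 42.5],
    sds=[9.284396, 8.324662, 8.526429, 5.446712],
    ns=[5, 5, 5, 4],
)
print(f"Hair color: F = {F_hair:.3f}, P = {p_hair:.4f}")
```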

Consider the ‘Pain threshold’ variable from the ANOVA dataset and the following group summary statistics.

data_anova.groupby('Hair color').agg(['mean', 'std', 'count']).loc[:, 'Pain threshold']

| Hair color | mean | std | count |
|---|---|---|---|
| Dark Blond | 51.2 | 9.284396 | 5 |
| Dark Brunette | 37.4 | 8.324662 | 5 |
| Light Blond | 59.2 | 8.526429 | 5 |
| Light Brunette | 42.5 | 5.446712 | 4 |

Calculating SSB#

The SSB or SSeffect quantifies the variability between the group means and the overall mean. It’s calculated as the sum of the squared differences between each group mean (\(\overline{x}_i\)) and the grand mean (\(\overline{x}\)), weighted by the number of data points in each group (\(n_i\)).

In this example, the grand mean (\(\overline{x}\)) is 47.842, as calculated previously.

| Group | Mean | Grand mean | Count | Calculation of SSB |
|---|---|---|---|---|
| Dark Blond | 51.2 | 47.842 | 5 | 5 * (51.2 - 47.842)**2 = 56.38 |
| Dark Brunette | 37.4 | 47.842 | 5 | 5 * (37.4 - 47.842)**2 = 545.18 |
| Light Blond | 59.2 | 47.842 | 5 | 5 * (59.2 - 47.842)**2 = 645.02 |
| Light Brunette | 42.5 | 47.842 | 4 | 4 * (42.5 - 47.842)**2 = 114.15 |
| Sum | | | | SSB ≈ 1360.73 |

We calculate the squared difference between each group mean and the grand mean, multiply it by the group size, and sum these values across all groups to obtain SSB ≈ 1360.73.

Calculating SSW#

SSW, or SSerror, measures the variability within each group. It’s calculated as the sum of the squared differences between each data point and its group mean. When we don’t have access to the individual data points, we can still calculate SSW using the standard deviation of each group.

| Group | Standard deviation | Count | Calculation of SSW |
|---|---|---|---|
| Dark Blond | 9.284 | 5 | (5 - 1) * 9.284**2 = 344.77 |
| Dark Brunette | 8.325 | 5 | (5 - 1) * 8.325**2 = 277.22 |
| Light Blond | 8.526 | 5 | (5 - 1) * 8.526**2 = 290.77 |
| Light Brunette | 5.447 | 4 | (4 - 1) * 5.447**2 = 89.01 |
| Sum | | | SSW = 1001.77 |

We calculate the SSW contribution of each group using the formula \((n_i - 1) s_i^2\) and then sum these values to obtain the total SSW, which in this case is 1001.77. Up to rounding, both SSB and SSW match the values we calculated previously from the raw data (1360.7 and 1001.8), confirming the accuracy of our approach. We can automate these calculations using Python.

# Calculate the overall mean of 'Pain threshold'

grand_mean = data_anova['Pain threshold'].mean()

print(f"Grand mean = {grand_mean:.4f}")

# Group by 'Hair color' and calculate relevant statistics

calc_table = data_anova.groupby('Hair color')['Pain threshold'].agg(['count', 'mean', 'std'])

# Calculate SSeffect and SSerror

calc_table['SSB'] = calc_table['count'] * (calc_table['mean'] - grand_mean)**2

calc_table['SSW'] = (calc_table['count'] - 1) * calc_table['std']**2

# Add a sum row

calc_table.loc['sum'] = calc_table.sum()

# Display the table

pg.print_table(calc_table.reset_index(), floatfmt='.4f', tablefmt='simple')

print(

f"The values to be used in the ANOVA table are: "

f"SSB = {calc_table.loc['sum','SSB']:.1f}, and "

f"SSW = {calc_table.loc['sum','SSW']:.1f}"

)

Grand mean = 47.8421

Hair color count mean std SSB SSW

-------------- ------- -------- ------- --------- ---------

Dark Blond 5.0000 51.2000 9.2844 56.3773 344.8000

Dark Brunette 5.0000 37.4000 8.3247 545.1878 277.2000

Light Blond 5.0000 59.2000 8.5264 645.0089 290.8000

Light Brunette 4.0000 42.5000 5.4467 114.1524 89.0000

sum 19.0000 190.3000 31.5822 1360.7263 1001.8000

The values to be used in the ANOVA table are: SSB = 1360.7, and SSW = 1001.8

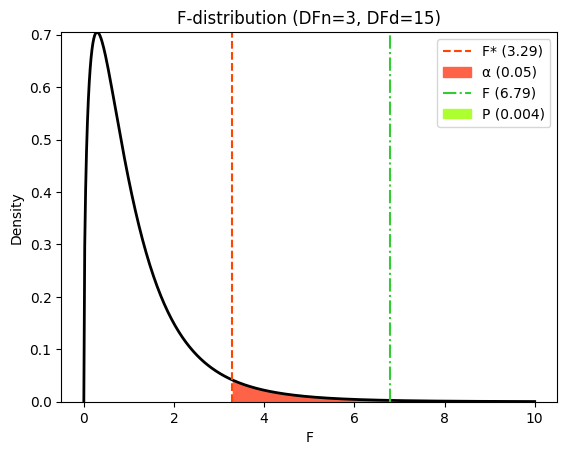

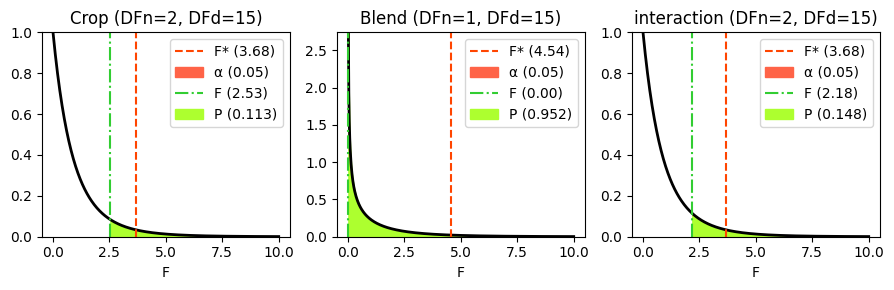

Visualizing the F-distribution and critical values#

Visualizing the F-distribution can help us understand the meaning of the F-statistic and the P value. It also allows us to see how the critical F-value relates to our calculated F-statistic and the significance level (\(\alpha\)).

We can use the scipy.stats module in Python to plot the F-distribution and shade the areas corresponding to the P value and the \(\alpha\) level, as we did in earlier chapters on comparing models and in nonlinear regression. This visualization provides a clear picture of:

The shape of the F-distribution for our specific degrees of freedom.

How extreme our observed F-statistic is under the null hypothesis.

The location of the critical F-value and its relationship to \(\alpha\).

The relationship between the P value and the area under the curve beyond the F-statistic.

import numpy as np

# Significance level (alpha)

α = 0.05

# Calculate critical F-value

f_crit = f(dfn=DF_between, dfd=DF_within).ppf(1 - α)

# Generate x values for plotting

x_f = np.linspace(0, 10, 500) # Adjusted range for better visualization

hx_f = f.pdf(x_f, DF_between, DF_within)

# Create the plot

plt.plot(x_f, hx_f, lw=2, color='black')

# Critical value

plt.axvline(

x=f_crit,

color='orangered',

linestyle='--',

label=f"F* ({f_crit:.2f})")

# Alpha area

plt.fill_between(

x_f[x_f >= f_crit],

hx_f[x_f >= f_crit],

color='tomato',

label=f"α ({α})")

# F-statistic

plt.axvline(

x=f_ratio,

color='limegreen',

linestyle='-.',

label=f"F ({f_ratio:.2f})")

# P value area

plt.fill_between(

x_f[x_f >= f_ratio],

hx_f[x_f >= f_ratio],

color='greenyellow',

label=f"P ({p_value:.3f})")

plt.xlabel("F")

plt.ylabel('Density')

plt.title(f"F-distribution (DFn={DF_between}, DFd={DF_within})")

plt.margins(x=0.05, y=0)

plt.legend();

Practical application with Python#

Now that we’ve established the core mathematical concepts behind ANOVA, let’s move on to the practical application. In this section, we’ll explore how to perform ANOVA using Python, leveraging powerful libraries like Pingouin and statsmodels. We’ll start with one-way ANOVA and then delve into more complex designs.

One-way ANOVA with Pingouin#

As we have seen on multiple occasions in the previous chapters, the Pingouin library is a powerful and user-friendly Python package designed for statistical analysis. It provides a wide range of functions for conducting ANOVA, including one-way ANOVA, repeated-measures ANOVA, and mixed-design ANOVA.

Let’s perform one-way ANOVA using Pingouin.

Having loaded the anova dataset for the manual calculations, we are now ready to use it for one-way ANOVA.

# data_anova = pg.read_dataset('anova')

# data_anova.head()

Normality test#

Before conducting ANOVA, it’s essential to check whether the data within each group follow a normal distribution. This assumption matters because the F-distribution used to compute the P value is derived under the assumption that the observations within each group are normally distributed.

To test for normality, we can use various statistical tests and graphical methods, as discussed extensively throughout this book. One common approach is the Shapiro-Wilk test, which assesses whether the data deviate significantly from a normal distribution. Additionally, visual tools like histograms and Q-Q plots can provide insights into the data’s distribution and help identify potential departures from normality.

print(pg.normality(data=data_anova, dv='Pain threshold', group='Hair color'))

W pval normal

Hair color

Light Blond 0.991032 0.983181 True

Dark Blond 0.939790 0.664457 True

Light Brunette 0.930607 0.597974 True

Dark Brunette 0.883214 0.324129 True

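Here, the normality assumption is satisfied for all groups (all P values are well above 0.05). Keep in mind, though, that with only four or five observations per group, the Shapiro-Wilk test has little power to detect departures from normality. If normality were violated in other cases, transformations (e.g., logarithmic or square root) could be applied to attempt to normalize the data before performing ANOVA. If normalization is not possible, the Kruskal-Wallis test provides a non-parametric alternative.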
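As a cross-check, the same per-group Shapiro-Wilk test can be run with `scipy.stats.shapiro`, which, to our understanding, is the test `pg.normality` applies by default. The sketch below is self-contained: the pain thresholds are typed in by hand and are assumed to correspond to Pingouin's 'anova' dataset (they reproduce the group means and sums of squares reported in this section).

```python
from scipy.stats import shapiro

# Pain thresholds by hair color (assumed to match Pingouin's 'anova' dataset)
pain = {
    "Light Blond": [62, 60, 71, 55, 48],
    "Dark Blond": [63, 57, 52, 41, 43],
    "Light Brunette": [42, 50, 41, 37],
    "Dark Brunette": [32, 39, 51, 30, 35],
}

results = {}
for color, values in pain.items():
    W, pval = shapiro(values)  # Shapiro-Wilk test on a single group
    results[color] = (W, pval)
    print(f"{color:<15} W = {W:.4f}  P = {pval:.4f}  normal = {pval > 0.05}")
```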

Homoscedasticity#

Another crucial assumption of ANOVA is homogeneity of variances, also known as homoscedasticity. This assumption states that the variances of the groups being compared should be roughly equal.

To test for homogeneity of variances, we can use Levene’s test or Bartlett’s test, discussed previously. These tests assess whether there are significant differences in the variances of the groups.

print(pg.homoscedasticity(data=data_anova, dv='Pain threshold', group='Hair color'))

W pval equal_var

levene 0.392743 0.760016 True

Here, this assumption is also met (P = 0.76). If, however, variances were unequal in other analyses, Welch’s ANOVA (discussed later in the chapter), which does not assume equal variances, would be employed. Transformations could also be considered in an attempt to achieve homoscedasticity.
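The same checks can be sketched directly with `scipy.stats`. Note that SciPy's `levene` centers each group on its median by default (the Brown-Forsythe variant), while `bartlett` is more sensitive to departures from normality. The group values are the same hand-typed copy of the 'anova' dataset, assumed to match Pingouin's.

```python
from scipy.stats import levene, bartlett

# Pain thresholds by hair color (assumed to match Pingouin's 'anova' dataset)
groups = [
    [62, 60, 71, 55, 48],  # Light Blond
    [63, 57, 52, 41, 43],  # Dark Blond
    [42, 50, 41, 37],      # Light Brunette
    [32, 39, 51, 30, 35],  # Dark Brunette
]

W_lev, p_lev = levene(*groups)      # center='median' by default
T_bart, p_bart = bartlett(*groups)  # assumes normality within groups
print(f"Levene:   W = {W_lev:.4f}, P = {p_lev:.4f}")
print(f"Bartlett: T = {T_bart:.4f}, P = {p_bart:.4f}")
```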

Conducting the ANOVA#

Since the data meet the assumptions of normality and homoscedasticity, we can proceed with standard ANOVA. The anova function in Pingouin provides a convenient way to perform this analysis.

By setting the detailed=True argument, we obtain a more comprehensive output that includes, for each source of variation, the sums of squares (SS), degrees of freedom (DF) and mean squares (MS), in addition to the F-statistic, the uncorrected P value (p-unc) and an effect size.

aov = pg.anova(

data=data_anova,

dv='Pain threshold',

between='Hair color',

detailed=True

)

print(aov.round(3)) # Round the output to 3 decimal places for better readability

Source SS DF MS F p-unc np2

0 Hair color 1360.726 3 453.575 6.791 0.004 0.576

1 Within 1001.800 15 66.787 NaN NaN NaN

The output is a DataFrame containing the sums of squares (between groups = 1360.726, within groups = 1001.8), along with the corresponding degrees of freedom and mean squares. The F-statistic and its associated P value are also provided. These values are consistent with the manual calculations performed earlier. Notably, the DataFrame also includes an effect size: the np2 column reports partial eta-squared, which in a one-way design equals SSB / (SSB + SSW) = 1360.726 / 2362.526 ≈ 0.576.
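The remaining columns follow directly from the two sums of squares. The short sketch below rebuilds them from the values printed above (rounded to 3 decimals), using `scipy.stats.f` for the P value; it only makes the arithmetic explicit.

```python
from scipy.stats import f

# Sums of squares and degrees of freedom from the pg.anova output above
SSB, SSW = 1360.726, 1001.800  # between groups, within groups
DF_b, DF_w = 3, 15             # k - 1 and N - k, with k = 4 groups and N = 19

MS_b = SSB / DF_b              # mean square between groups
MS_w = SSW / DF_w              # mean square within groups (error)
F = MS_b / MS_w
p = f.sf(F, DF_b, DF_w)        # right-tail area of the F-distribution
np2 = SSB / (SSB + SSW)        # partial eta-squared (= eta-squared in one-way ANOVA)

print(f"MS_b = {MS_b:.3f}, MS_w = {MS_w:.3f}, F = {F:.3f}, P = {p:.4f}, np2 = {np2:.3f}")
```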

While Pingouin’s anova function can be called as a standalone function (pg.anova), it’s often more convenient to call it as a method directly on a pandas DataFrame (df.anova()) when the data is already in that format. For a more compact output, set detailed=False. The effsize argument controls the effect size measure; by default, partial eta-squared ('np2') is reported, but setting effsize='n2' returns standard eta-squared. In a one-way design, both measures are identical (0.576 here).

print(

data_anova.anova(

dv='Pain threshold',

between='Hair color',

detailed=False,

effsize='n2'

) # type: ignore

)

Source ddof1 ddof2 F p-unc n2

0 Hair color 3 15 6.791407 0.004114 0.575962

Calculating \(\omega^2\) requires manual application of the relevant formula (derived from the definition given previously).

formulas = [

lambda: (SSB - (r-1) * MS_within) / (SST + MS_within), # original formula defined in the introduction

lambda: DF_between * (MS_between - MS_within) / (SST + MS_within),

lambda: (r-1) * (f_ratio-1) / ((r-1) * (f_ratio-1) + N)

]

for i, formula in enumerate(formulas):

print(f"ω² (formula {i+1}) = {formula():.8f}")

ω² (formula 1) = 0.47765205

ω² (formula 2) = 0.47765205

ω² (formula 3) = 0.47765205

One-way ANOVA with scipy.stats#

While libraries like Pingouin offer convenient functions for conducting ANOVA, it’s also beneficial to understand how to perform ANOVA using the core statistical functions available in scipy.stats. The f_oneway function in scipy.stats provides a straightforward way to conduct one-way ANOVA. The function takes the samples as separate positional arguments, one array of measurements per group or condition in the experiment; when the groups are stored in a list, we can unpack it with the * operator, as in f_oneway(*groups).

from scipy.stats import f_oneway

# Perform one-way ANOVA using scipy.stats

hair_colors = data_anova['Hair color'].unique()

groups = [data_anova['Pain threshold'][data_anova['Hair color'] == color] for color in hair_colors]

F_scipy, p_scipy = f_oneway(*groups)

# similar to

# F, p = f_oneway(

# data_anova[data_anova['Hair color'] == 'Dark Blond']['Pain threshold'],

# data_anova[data_anova['Hair color'] == 'Dark Brunette']['Pain threshold'],

# data_anova[data_anova['Hair color'] == 'Light Blond']['Pain threshold'],

# data_anova[data_anova['Hair color'] == 'Light Brunette']['Pain threshold'],

# )

print(f"F statistic = {F_scipy:.3f} with P value from the F distribution = {p_scipy:.5f}")

F statistic = 6.791 with P value from the F distribution = 0.00411

One-way ANOVA using statsmodels#

We can also perform ANOVA using the statsmodels library. It provides a comprehensive set of tools for statistical modeling, including a robust framework for ANOVA. While Pingouin focuses on ease of use and scipy.stats offers core statistical functions, statsmodels gives us more flexibility in specifying and fitting different ANOVA models.

One of the key advantages of statsmodels is its explicit link to regression analysis. As we discussed in previous chapters, ANOVA can be viewed as a special case of linear regression where the predictor variables are categorical. statsmodels allows us to leverage this connection by formulating ANOVA models using its formula API, which utilizes the Patsy library for formula parsing.

When using the statsmodels formula API, variable names should be valid Python identifiers. This means avoiding spaces and special characters like periods (.) or hyphens (-). If our dataset contains such characters in variable names, as ‘Pain threshold’ and ‘Hair color’ do with their spaces, we can use Patsy’s Q() function to quote these names, ensuring they are interpreted correctly.

Additionally, Patsy’s C() function can be used in a formula to explicitly mark a variable as categorical, so that it is encoded as a set of group indicators. In our case, since the ‘Hair color’ variable already contains string values, statsmodels automatically treats it as a categorical variable.

from statsmodels.formula.api import ols

formula = "Q('Pain threshold') ~ Q('Hair color')"

# Prepare the one-way ANOVA model using statsmodels

model_anova_statsmodels = ols(formula=formula, data=data_anova)

# Fit the model

results_anova_statsmodels = model_anova_statsmodels.fit()

# Print the model summary

print(results_anova_statsmodels.summary2())

Results: Ordinary least squares

===================================================================================

Model: OLS Adj. R-squared: 0.491

Dependent Variable: Q('Pain threshold') AIC: 137.2568

Date: 2025-02-18 09:28 BIC: 141.0346

No. Observations: 19 Log-Likelihood: -64.628

Df Model: 3 F-statistic: 6.791

Df Residuals: 15 Prob (F-statistic): 0.00411

R-squared: 0.576 Scale: 66.787

-----------------------------------------------------------------------------------

Coef. Std.Err. t P>|t| [0.025 0.975]

-----------------------------------------------------------------------------------

Intercept 51.2000 3.6548 14.0091 0.0000 43.4100 58.9900

Q('Hair color')[T.Dark Brunette] -13.8000 5.1686 -2.6700 0.0175 -24.8167 -2.7833

Q('Hair color')[T.Light Blond] 8.0000 5.1686 1.5478 0.1425 -3.0167 19.0167

Q('Hair color')[T.Light Brunette] -8.7000 5.4822 -1.5870 0.1334 -20.3849 2.9849

-----------------------------------------------------------------------------------

Omnibus: 1.137 Durbin-Watson: 2.021

Prob(Omnibus): 0.566 Jarque-Bera (JB): 0.979

Skew: 0.358 Prob(JB): 0.613

Kurtosis: 2.150 Condition No.: 5

===================================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly

specified.

UserWarning: `kurtosistest` p-value may be inaccurate with fewer than 20 observations; only n=19 observations were given.

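Our primary focus is on the ANOVA results rather than the detailed OLS regression output, although the summary already reports the degrees of freedom (Df Model = 3, Df Residuals = 15), the F-statistic (6.791) and the associated P value (0.00411). The R-squared (0.576) also equals the eta-squared effect size reported by Pingouin.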

We can use the anova_lm function in statsmodels to directly obtain the ANOVA table. This function takes the fitted OLS model as an argument and returns the ANOVA table, which summarizes the key information about the sources of variation, degrees of freedom, sums of squares, mean squares, F-statistic, and P value.

from statsmodels.stats.anova import anova_lm

# Obtain the ANOVA table

anova_table_statsmodels = anova_lm(results_anova_statsmodels, typ=1)

print(anova_table_statsmodels.round(3)) # Print the rounded ANOVA table

df sum_sq mean_sq F PR(>F)

Q('Hair color') 3.0 1360.726 453.575 6.791 0.004

Residual 15.0 1001.800 66.787 NaN NaN

While we obtained the same results using Pingouin and scipy.stats, statsmodels offers greater flexibility for more complex ANOVA designs, such as factorial ANOVA and ANCOVA, and allows us to specify models using R-like formulas. Additionally, statsmodels provides a deeper connection to regression analysis, enabling us to explore the relationships between variables in more detail.
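To make the link between ANOVA and regression concrete, the sketch below fits the same model with plain NumPy least squares, using treatment (dummy) coding with Dark Blond as the reference level, as in the statsmodels output above. The intercept is then the mean of the reference group, and each coefficient is the difference between a group mean and that reference mean. This illustrates the idea only; it is not how statsmodels computes its results internally, and the group values are again an assumed, hand-typed copy of the dataset.

```python
import numpy as np

# Pain thresholds by hair color (assumed to match Pingouin's 'anova' dataset)
pain = {
    "Dark Blond": [63, 57, 52, 41, 43],
    "Dark Brunette": [32, 39, 51, 30, 35],
    "Light Blond": [62, 60, 71, 55, 48],
    "Light Brunette": [42, 50, 41, 37],
}
y = np.concatenate([np.asarray(v, dtype=float) for v in pain.values()])
labels = np.repeat(list(pain), [len(v) for v in pain.values()])

# Design matrix: intercept + one indicator column per non-reference level
levels = list(pain)  # the first level (Dark Blond) is the reference
k = len(levels)
X = np.column_stack(
    [np.ones_like(y)] + [(labels == lvl).astype(float) for lvl in levels[1:]]
)
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

# Sums of squares from the fitted model
SSW = np.sum((y - X @ coef) ** 2)  # residual (within-group) SS
SST = np.sum((y - y.mean()) ** 2)  # total SS
SSB = SST - SSW                    # SS explained by hair color
R2 = SSB / SST
F = (SSB / (k - 1)) / (SSW / (len(y) - k))

print("Coefficients:", coef.round(4))
print(f"R² = {R2:.3f}, F = {F:.3f}")
```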

Repeated measures ANOVA#

Repeated measures ANOVA (rmANOVA) is used when we have measurements that are repeatedly taken on the same subjects or entities. This design is common in many research fields, including biology, where we might track the growth of plants over time, measure the response of patients to a drug at multiple intervals, or observe the behavior of animals under different conditions.

Here are some scenarios where rmANOVA is appropriate:

Measurements made repeatedly for each subject: we might measure the blood pressure of patients before, during, and after treatment.

Subjects recruited as matched sets: we might recruit pairs of twins and assign one twin to a treatment group and the other to a control group.

Experiment run several times: we might repeat an experiment on several occasions (e.g., different days or batches), each run including all the conditions being compared, so that each run plays the role of a subject.

More generally, we should use a repeated measures test whenever we expect the measurements within a subject (or matched set) to be more similar to each other than to measurements from other subjects.

One-way rmANOVA can be viewed as an extension of the paired-samples t-test for comparing the means of three or more levels of a within-subjects variable. rmANOVA designs can be more complex; for example, two-way rmANOVA is used to evaluate simultaneously the effect of two within-subject factors on a continuous outcome variable. In this section, we focus on one-way rmANOVA.
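The link with the paired t-test can be checked numerically: with only two within-subject levels, the rmANOVA F-statistic equals the square of the paired t statistic, and both tests give the same P value. The sketch below assumes the ‘before’ and ‘during’ blood pressure values from the example dataset introduced later in this section.

```python
import numpy as np
from scipy.stats import f, ttest_rel

# 'before' and 'during' blood pressures of the 15 patients used later in this section
before = np.array([165, 155, 138, 150, 149, 135, 145, 170, 138, 144, 165, 139, 141, 149, 135])
during = np.array([145, 139, 141, 145, 155, 138, 150, 166, 143, 145, 155, 165, 139, 141, 137])

X = np.column_stack([before, during]).astype(float)  # n subjects x r levels
n, r = X.shape
grand = X.mean()

SST = np.sum((X - grand) ** 2)
SSB = n * np.sum((X.mean(axis=0) - grand) ** 2)  # effect of the within-subject factor
SSS = r * np.sum((X.mean(axis=1) - grand) ** 2)  # individual differences
SSW = SST - SSB - SSS                            # error

df_effect, df_error = r - 1, (n - 1) * (r - 1)
F = (SSB / df_effect) / (SSW / df_error)
p_F = f.sf(F, df_effect, df_error)

t, p_t = ttest_rel(before, during)
print(f"F = {F:.4f}, t² = {t**2:.4f}, P (rmANOVA) = {p_F:.4f}, P (paired t) = {p_t:.4f}")
```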

Partitioning variance in rmANOVA#

In one-way repeated measures ANOVA, the total variance is partitioned into three components:

Sum of squares between (SSB) or SSeffect: this is the variance explained by the factor of interest (e.g., time or treatment)

Sum of squares subjects (SSS) or SSsubjects: this is the variance attributed to individual differences between subjects. This is calculated as:

\[\text{SSS} = \text{SS}_\text{subjects} = r \sum_s (\overline{x}_s - \overline{x})^2\]where \(r\) is the number of repeated measurements, \(\overline{x}_s\) is the mean for subject s across all measurements, and \(\overline{x}\) is the grand mean.

Sum of squares within (SSW) or SSerror: this is the unexplained or residual variance, after accounting for the effect of the within-subjects factor and the individual differences between subjects.

While the concept of partitioning variance is similar to standard ANOVA, the error term is different: the variation due to individual differences between subjects is removed from it and assigned to SSsubjects. Rather than writing out an explicit formula for SSerror here, we can obtain it by subtraction.

We can compute the total sum of squares (SST) using the universal formula \(\text{SST} = \text{SS}_\text{total} = \sum_i \sum_j (x_{ij} - \overline{x})^2\). The sum of squares for the effect (SSB or SSeffect) is determined on a group basis, as in standard ANOVA. We know that the total variation is partitioned as follows:

\[\text{SS}_\text{total} = \text{SS}_\text{effect} + \text{SS}_\text{subjects} + \text{SS}_\text{error}\]

or, using abbreviations, \(\text{SST} = \text{SSB} + \text{SSS} + \text{SSW}\). This partitioning allows us to calculate the remaining sum of squares (SSW or SSerror) by subtracting SSS and SSB from SST, as we do in the manual calculations below.

Calculating the F-statistic#

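The F-statistic in rmANOVA is calculated as:

\[F = \frac{\text{MS}_\text{effect}}{\text{MS}_\text{error}} = \frac{\text{SS}_\text{effect} / (r - 1)}{\text{SS}_\text{error} / [(n - 1)(r - 1)]}\]

Here, \(r\) is the number of repeated measurements (or levels of the factor) and \(n\) is the number of subjects.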

The key difference lies in the denominator of the F-ratio. In standard ANOVA, the error degrees of freedom are calculated as \(N - r\), while in rmANOVA, they are calculated as \((n - 1)(r - 1)\). This difference reflects the fact that in rmANOVA, we are accounting for the individual differences between subjects, which reduces the error variance and can lead to increased power in detecting significant effects.

The P value can then be calculated using an F-distribution with \((r - 1)\) and \((n - 1)(r - 1)\) degrees of freedom.

Advantages of rmANOVA#

Repeated measures ANOVA offers several advantages over traditional one-way ANOVA:

Increased power: by accounting for individual differences between subjects, rmANOVA can be more powerful in detecting significant effects.

Reduced error variance: the SSsubjects term captures some of the variability that would otherwise be included in the error term, leading to a smaller error variance and increased power.

Efficiency: fewer subjects are needed compared to an independent groups design to achieve the same level of power.
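The gain in power can be illustrated by analyzing the same data twice. The self-contained sketch below uses the blood pressure values from the example dataset created in the next subsection and computes the F-statistic once as if the 45 measurements came from independent groups (subject variability left in the error term, N - r = 42 error degrees of freedom), and once as an rmANOVA ((n - 1)(r - 1) = 28 error degrees of freedom).

```python
import numpy as np
from scipy.stats import f, f_oneway

# Blood pressure of 15 patients before, during and after treatment
before = np.array([165, 155, 138, 150, 149, 135, 145, 170, 138, 144, 165, 139, 141, 149, 135])
during = np.array([145, 139, 141, 145, 155, 138, 150, 166, 143, 145, 155, 165, 139, 141, 137])
after = np.array([140, 133, 140, 145, 149, 125, 142, 160, 140, 142, 133, 140, 141, 140, 133])

X = np.column_stack([before, during, after]).astype(float)
n, r = X.shape
grand = X.mean()
SST = np.sum((X - grand) ** 2)
SSB = n * np.sum((X.mean(axis=0) - grand) ** 2)
SSS = r * np.sum((X.mean(axis=1) - grand) ** 2)

# Independent-groups ANOVA: subject variability stays in the error term
df_err_ind = n * r - r
F_ind = (SSB / (r - 1)) / ((SST - SSB) / df_err_ind)
p_ind = f.sf(F_ind, r - 1, df_err_ind)
F_check = f_oneway(before, during, after).statistic  # cross-check with SciPy

# Repeated measures ANOVA: subject variability is removed from the error term
df_err_rm = (n - 1) * (r - 1)
F_rm = (SSB / (r - 1)) / ((SST - SSB - SSS) / df_err_rm)
p_rm = f.sf(F_rm, r - 1, df_err_rm)

print(f"Independent groups: F({r - 1}, {df_err_ind}) = {F_ind:.3f}, P = {p_ind:.4f}")
print(f"Repeated measures:  F({r - 1}, {df_err_rm}) = {F_rm:.3f}, P = {p_rm:.4f}")
```

The effect sum of squares is identical in both analyses; only the error term changes.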

Calculating rmANOVA Manually#

As we did for one-way ANOVA, we’ll now manually calculate the steps involved in repeated measures ANOVA. Let’s create a dataset where we measure the blood pressure of 15 patients before, during, and after treatment. This will allow us to assess how blood pressure changes over the course of the treatment using rmANOVA.

# Create the DataFrame

data_rmanova = pd.DataFrame({

'before': [165, 155, 138, 150, 149, 135, 145, 170, 138, 144, 165, 139, 141, 149, 135],

'during': [145, 139, 141, 145, 155, 138, 150, 166, 143, 145, 155, 165, 139, 141, 137],

'after': [140, 133, 140, 145, 149, 125, 142, 160, 140, 142, 133, 140, 141, 140, 133]

})

# Add the case number as index

data_rmanova.index.name = 'case'

# Display the DataFrame

data_rmanova.head()

| case | before | during | after |
|---|---|---|---|
| 0 | 165 | 145 | 140 |
| 1 | 155 | 139 | 133 |
| 2 | 138 | 141 | 140 |
| 3 | 150 | 145 | 145 |
| 4 | 149 | 155 | 149 |

When working with complex datasets for repeated measures ANOVA, it’s important to be mindful of potential issues like missing values and duplicated data. Different statistical software and packages might handle these issues differently, so it’s crucial to understand their specific data handling procedures.

For this demonstration, we’ll use a simplified dataset to focus on the core concepts of rmANOVA and the manual calculation of the sums of squares. First, however, we need to transform the dataset into a “long” format, where each row represents a single observation with its subject identifier, time point and blood pressure value. This is the format used by the grouping operations in our manual calculations and by the Pingouin and statsmodels functions used below, which take the dependent variable, within-subject factor and subject column names as arguments.

# Melt the DataFrame into long format

# after converting the index ('case' numbers) into a regular column

data_rmanova_long = data_rmanova.reset_index().melt(

id_vars='case', # Keep 'case' as identifier

var_name='time', # Name of the new column for time points

value_name='blood_pressure', # Name of the new column for blood pressure values

)

data_rmanova_long.head()

| | case | time | blood_pressure |
|---|---|---|---|
| 0 | 0 | before | 165 |
| 1 | 1 | before | 155 |
| 2 | 2 | before | 138 |
| 3 | 3 | before | 150 |
| 4 | 4 | before | 149 |

pd.set_option('future.no_silent_downcasting', True)

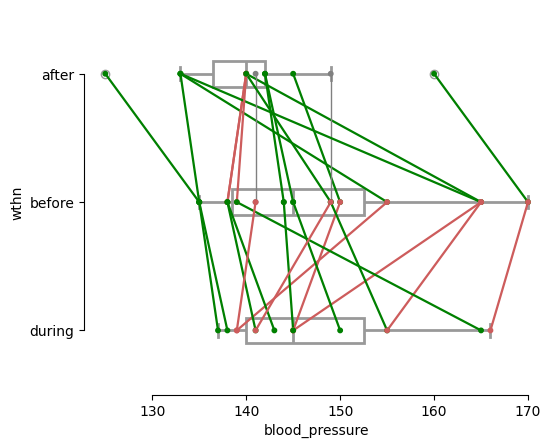

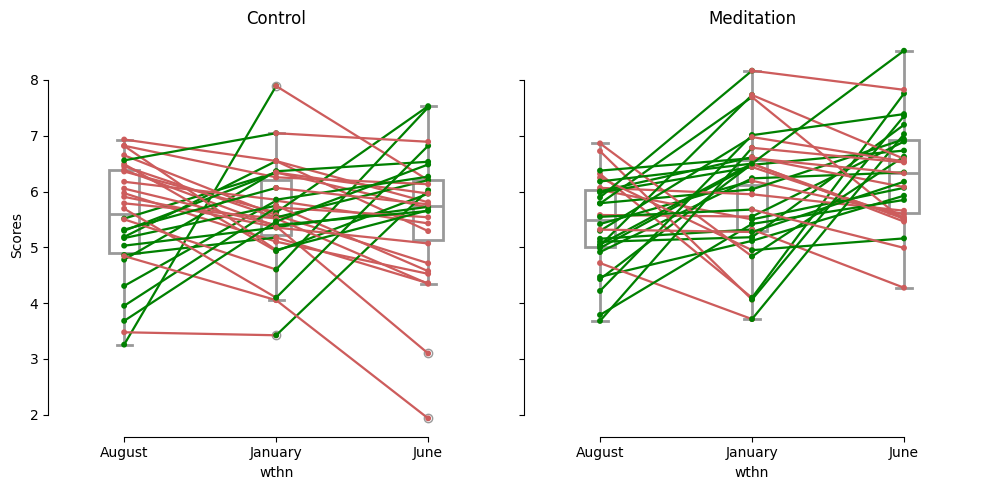

plt.figure(figsize=(6, 5))

# Create the paired plot

pg.plot_paired(

data=data_rmanova_long,

dv='blood_pressure',

within='time',

subject='case',

boxplot=True,

orient='h',

# boxplot_in_front=False, # fix submitted via a PR

boxplot_kwargs={'color': 'white', 'linewidth': 2, 'zorder': 1},

)

sns.despine(trim=True); # not default in Pingouin anymore

The following table provides a structured overview of the calculations and components involved in rmANOVA, making it easier to understand and interpret the results:

| Source of variation | Degrees of freedom | Sum of squares | Mean square | F-ratio |
|---|---|---|---|---|
| Treatment/Time point | \(\text{DF}_\text{B} = r - 1\) | SSB | MSeffect = SSB / DFB | MSeffect / MSerror |
| Subjects | \(\text{DF}_\text{S} = n - 1\) | SSS | | |
| Residuals (error) | \(\text{DF}_\text{W} = (n-1)(r-1)\) | SSW | MSerror = SSW / DFW | |
| Total | \(\text{DF}_\text{T} = nr - 1\) | SST | | |

By manually calculating these values, we gain a deeper understanding of the underlying calculations and how the ANOVA table is constructed.

# Parameters of the analysis

r = len(data_rmanova_long['time'].unique()) # Number of repeated measurements (time points)

n = len(data_rmanova_long['case'].unique()) # Number of subjects

DF_effect = r - 1 # Degrees of freedom for the effect

DF_subjects = n - 1 # Degrees of freedom for subjects

DF_error = (n - 1) * (r - 1) # Degrees of freedom for error

print(f"There are {r} repeated measurements and {n} subjects")

print(f"This leads to {DF_effect}, {DF_subjects}, and {DF_error} degrees of freedom for the effect, subjects, and error, respectively")

There are 3 repeated measurements and 15 subjects

This leads to 2, 14, and 28 degrees of freedom for the effect, subjects, and error, respectively

# Calculate the overall mean of 'blood_pressure'

grand_mean = data_rmanova_long['blood_pressure'].mean()

print(f"Grand mean = {grand_mean:.2f}")

# Sums of squares

# SST (total sum of squares)

SST = ((data_rmanova_long['blood_pressure'] - grand_mean)**2).sum()

# SSS (sum of squares for subjects)

SSS = (

data_rmanova_long.groupby('case')['blood_pressure']

.apply(lambda x: (x.count()) * ((x.mean() - grand_mean)**2))

).sum()

# SSB (sum of squares between groups)

SSB = (

data_rmanova_long.groupby(['case', 'time'])['blood_pressure']

.mean() # Calculate the mean for each Subject-Time combination

.groupby('time') # Group by Time and calculate SSB

.apply(lambda x: x.count() * (x.mean() - grand_mean)**2)

).sum()

# SSW (sum of squares within subjects)

SSW = SST - SSS - SSB

print(f"With SST = {SST:.3f}, SSS = {SSS:.3f}, and SSB = {SSB:.3f}, we obtain SSW = {SSW:.3f}")

Grand mean = 145.00

With SST = 4452.000, SSS = 2575.333, and SSB = 524.933, we obtain SSW = 1351.733
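In this balanced design, SSerror can also be computed directly, without subtraction, as the subject-by-time interaction sum of squares \(\sum_s \sum_j (x_{sj} - \overline{x}_s - \overline{x}_j + \overline{x})^2\), where \(\overline{x}_j\) is the mean at time point j. The NumPy sketch below works on the wide data (rows = subjects, columns = time points) and checks that both routes agree.

```python
import numpy as np

# Wide layout: one row per patient, one column per time point (before, during, after)
X = np.column_stack([
    [165, 155, 138, 150, 149, 135, 145, 170, 138, 144, 165, 139, 141, 149, 135],
    [145, 139, 141, 145, 155, 138, 150, 166, 143, 145, 155, 165, 139, 141, 137],
    [140, 133, 140, 145, 149, 125, 142, 160, 140, 142, 133, 140, 141, 140, 133],
]).astype(float)
n, r = X.shape

grand = X.mean()
subject_means = X.mean(axis=1, keepdims=True)  # shape (n, 1)
time_means = X.mean(axis=0, keepdims=True)     # shape (1, r)

# Direct formula: what remains after removing the subject and time effects
SS_error_direct = np.sum((X - subject_means - time_means + grand) ** 2)

# Subtraction route, as in the manual calculation above
SST = np.sum((X - grand) ** 2)
SSB = n * np.sum((time_means - grand) ** 2)
SSS = r * np.sum((subject_means - grand) ** 2)
SS_error_subtraction = SST - SSB - SSS

print(f"Direct: {SS_error_direct:.3f}, by subtraction: {SS_error_subtraction:.3f}")
```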

# Calculate Mean Squares

MS_effect = SSB / DF_effect

MS_error = SSW / DF_error

print(f"MS for effect = {MS_effect:.4f}, and for error = {MS_error:.4f}")

MS for effect = 262.4667, and for error = 48.2762

# Calculate F-ratio and associated p-value

f_ratio = MS_effect / MS_error # Use the appropriate mean squares

p_value = f.sf(f_ratio, DF_effect, DF_error) # Use the correct degrees of freedom

print(f"Finally, with an F ratio = {f_ratio:.4f}, the associated P value = {p_value:.5f}")

Finally, with an F ratio = 5.4368, the associated P value = 0.01012

We’ve explored the key components of repeated measures analysis of variance: sums of squares (SST, SSB, SSS, SSW), degrees of freedom, and mean squares (MSeffect, MSsubjects, MSerror). These elements come together in a structured way within the rmANOVA table. This table provides a framework for comparing a model that assumes no difference between the repeated measurements (our null hypothesis) to a model that allows for differences.

Here’s the rmANOVA table we constructed for our example analyzing the effect of time on blood pressure:

| Source of variation | Sum of squares | DF | MS | F-ratio | P value |
|---|---|---|---|---|---|
| Effect/Time | 524.93 | 2 | 262.47 | 5.4368 | 0.01012 |
| Subjects | 2575.3 | 14 | | | |
| Error | 1351.73 | 28 | 48.276 | | |
| Total | 4452 | 44 | | | |

The table partitions the total variation (SST) into the variation explained by differences between the time points (SSB or SSeffect), the variation between subjects (SSS or SSsubjects), and the unexplained variation within subjects (SSW or SSerror). The degrees of freedom (DF) are shown for each source of variation. The mean squares (MS) are calculated by dividing the sum of squares by the corresponding degrees of freedom. The F-ratio, calculated as MSeffect / MSerror, compares the explained and unexplained variation, adjusted for their degrees of freedom. Finally, the P value helps us determine the statistical significance of the F-ratio.

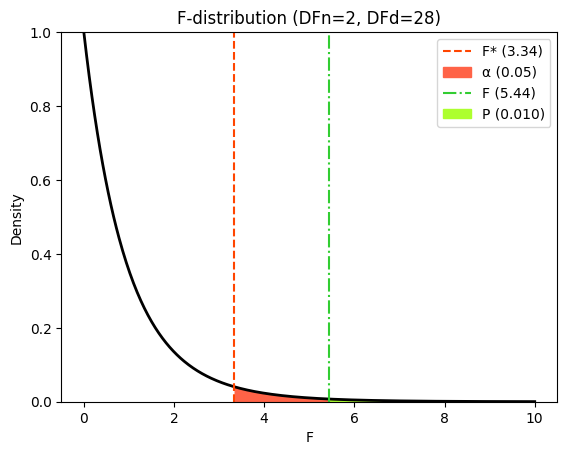

# Significance level (alpha)

alpha = 0.05

# Calculate critical F-value

f_crit = f(dfn=DF_effect, dfd=DF_error).ppf(1 - alpha)

# Generate x values for plotting

x_f = np.linspace(0, 10, 500) # Adjusted range for better visualization

hx_f = f.pdf(x_f, DF_effect, DF_error)

# Create the plot

plt.plot(x_f, hx_f, lw=2, color='black')

# Critical value

plt.axvline(

x=f_crit,

color='orangered',

linestyle='--',

label=f"F* ({f_crit:.2f})")

# Alpha area

plt.fill_between(

x_f[x_f >= f_crit],

hx_f[x_f >= f_crit],

color='tomato',

label=f"α ({alpha})")

# F-statistic

plt.axvline(

x=f_ratio,

color='limegreen',

linestyle='-.',

label=f"F ({f_ratio:.2f})")

# P value area

plt.fill_between(

x_f[x_f >= f_ratio],

hx_f[x_f >= f_ratio],

color='greenyellow',

label=f"P ({p_value:.3f})")

plt.xlabel("F")

plt.ylabel('Density')

plt.title(f"F-distribution (DFn={DF_effect}, DFd={DF_error})")

plt.margins(x=0.05, y=0)

plt.legend();

If the null hypothesis were true (i.e., if there were no differences between the means of the blood pressure measurements at different time points), we would expect the MSeffect and MSerror values to be similar, resulting in an F-ratio close to 1.0. However, in our example, the F-ratio is 5.437, suggesting that the differences between time points explain significantly more variation than would be expected by chance. This small P value (0.010) provides strong evidence against the null hypothesis, leading us to conclude that there are significant differences in blood pressure between at least two of the time points.

Repeated measures ANOVA using Python#

Now that we have a better understanding of the underlying principles of rmANOVA, we’ll explore how to conduct this analysis using Python. We’ll leverage two powerful libraries, Pingouin and statsmodels, to perform the calculations and interpret the results.

We’ll use the rm_anova function in Pingouin and the AnovaRM function in statsmodels to streamline the analysis.

rmANOVA using Pingouin#

Before we start the rmANOVA analysis, we should check some important assumptions:

Normality: rmANOVA assumes that the residuals (the differences between the observed values and the predicted values) are normally distributed.

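Homoscedasticity: the variability of the measurements should be similar across the levels of the within-subjects factor. In repeated measures designs, this requirement takes the more specific form of the sphericity assumption discussed below.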

We’ve discussed these assumptions in the context of standard ANOVA, and they apply to rmANOVA as well.

Another important assumption in rmANOVA is sphericity. This assumption states that the variances of the differences between all possible pairs of within-subjects conditions are equal. We can test this assumption using Mauchly’s test of sphericity.

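Violation of the sphericity assumption can lead to an inflated Type I error rate (false positives), because the F-statistic no longer follows the F-distribution used to compute the P value. If the P value from Mauchly’s test exceeds 0.05, we have no evidence that sphericity is violated. Note that sphericity is always satisfied when the within-subjects factor has only two levels, since there is then a single set of differences.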

# Test for sphericity

spher_results = pg.sphericity(

dv='blood_pressure',

within='time',

subject='case',

data=data_rmanova_long

)

# Print the sphericity test results

print(spher_results)

SpherResults(spher=True, W=0.8533042981018584, chi2=2.0623077334263207, dof=2, pval=0.35659525969062833)

In our example, Mauchly’s test gives W = 0.853 and χ² = 2.06 with 2 degrees of freedom, for a P value of 0.357, well above 0.05. There is therefore no evidence that sphericity is violated, and we can proceed with the rmANOVA without correction.

However, it’s worth noting that in situations where the sphericity assumption is violated, corrections like the Greenhouse-Geisser or Huynh-Feldt correction can be applied. These corrections multiply both degrees of freedom by an estimate of the sphericity parameter epsilon (ε ≤ 1, with ε = 1 under perfect sphericity) and recompute the P value from the F-distribution with these reduced degrees of freedom.
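Both Mauchly’s W and the Greenhouse-Geisser epsilon can be derived from the covariance matrix of the data projected onto orthonormal contrasts (here, before vs during, and the average of before and during vs after). The NumPy sketch below uses the classical textbook formulas, with the usual chi-squared approximation for Mauchly’s test. It is not Pingouin’s code, although for these data it gives the same W, χ² and P value as pg.sphericity above. The observed F = 5.4368 is taken from the manual calculation.

```python
import numpy as np
from scipy.stats import chi2, f

# Wide layout: one row per patient, columns = before, during, after
X = np.column_stack([
    [165, 155, 138, 150, 149, 135, 145, 170, 138, 144, 165, 139, 141, 149, 135],
    [145, 139, 141, 145, 155, 138, 150, 166, 143, 145, 155, 165, 139, 141, 137],
    [140, 133, 140, 145, 149, 125, 142, 160, 140, 142, 133, 140, 141, 140, 133],
]).astype(float)
n, r = X.shape
p = r - 1  # number of contrasts

# Orthonormal contrasts: each column sums to zero and has unit length
C = np.array([[1.0, 1.0], [-1.0, 1.0], [0.0, -2.0]])
C /= np.linalg.norm(C, axis=0)

S = np.cov(X @ C, rowvar=False)  # p x p covariance of the contrast scores

# Mauchly's test of sphericity (chi-squared approximation)
W = np.linalg.det(S) / (np.trace(S) / p) ** p
d = 1 - (2 * p**2 + p + 2) / (6 * p * (n - 1))
chi2_stat = -(n - 1) * d * np.log(W)
dof = p * (p + 1) // 2 - 1
p_mauchly = chi2.sf(chi2_stat, dof)

# Greenhouse-Geisser epsilon and the corrected P value for the observed F
eps_gg = np.trace(S) ** 2 / (p * np.trace(S @ S))
F_obs = 5.4368
p_gg = f.sf(F_obs, eps_gg * (r - 1), eps_gg * (n - 1) * (r - 1))

print(f"Mauchly: W = {W:.4f}, chi2 = {chi2_stat:.4f}, dof = {dof}, P = {p_mauchly:.4f}")
print(f"Greenhouse-Geisser: eps = {eps_gg:.4f}, corrected P = {p_gg:.4f}")
```

Because W and epsilon depend only on the contrast covariance matrix up to a rotation, any set of orthonormal contrasts gives the same values.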

# Perform rmANOVA with automatic sphericity correction

aov_rm = pg.rm_anova(

dv='blood_pressure',

within='time',

subject='case',

data=data_rmanova_long,

detailed=True,

effsize="ng2", # Use generalized eta-squared as effect size

correction='auto' # Automatically apply sphericity correction if needed

)

print(aov_rm.round(4))

Source SS DF MS F p-unc ng2 eps

0 time 524.9333 2 262.4667 5.4368 0.0101 0.1179 0.8721

1 Error 1351.7333 28 48.2762 NaN NaN NaN NaN

The results indicate a statistically significant effect of ‘time’ on ‘blood_pressure’ with \(F(2, 28) = 5.4368\), \(P = 0.0101\), and \(\eta^2_g = 0.1179\). This means that there is a significant difference in blood pressure across the different time points. The generalized eta-squared value of 0.1179 suggests that approximately 11.8% of the total variance in ‘blood_pressure’ is explained by the ‘time’ factor. The eps column gives the Greenhouse-Geisser epsilon (0.8721); since Mauchly’s test did not detect a violation of sphericity, no corrected P value is added to the table.
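The generalized eta-squared of a one-way repeated measures design can be recovered from the sums of squares of our manual calculation: the subject variability is kept in the denominator, which makes it comparable to eta-squared from a between-subjects design. For contrast, partial eta-squared, which drops the subject variability from the denominator, is much larger here. The sketch below simply does this arithmetic.

```python
# Sums of squares from the manual rmANOVA calculation above
SS_effect = 524.9333     # time
SS_subjects = 2575.3333  # individual differences
SS_error = 1351.7333     # residual

ng2 = SS_effect / (SS_effect + SS_subjects + SS_error)  # generalized eta-squared
np2 = SS_effect / (SS_effect + SS_error)                # partial eta-squared

print(f"generalized eta² = {ng2:.4f}, partial eta² = {np2:.4f}")
```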

Repeated measures ANOVA using statsmodels#

We can also perform repeated measures ANOVA (rmANOVA) using the statsmodels library, which offers more flexibility for specifying and fitting different ANOVA models, especially for complex designs.

We cannot simply fit an OLS model with time as the only predictor, because it doesn’t account for the within-subject correlations that are inherent in repeated measures designs. Such a model would treat the repeated measurements as independent observations, which violates the assumptions of the model and leaves the between-subject variability in the error term. Therefore, for rmANOVA, it’s more appropriate to use specialized functions or classes like AnovaRM in statsmodels or rm_anova in Pingouin, which are designed to handle the within-subject structure and, in Pingouin’s case, to test and correct for violations of sphericity.

from statsmodels.stats.anova import AnovaRM

# Perform rmANOVA using AnovaRM

model_anova_rm_statsmodels = AnovaRM(

data=data_rmanova_long,

depvar='blood_pressure',

subject='case',

within=['time']

)

# results_anova_rm_statsmodels = model_anova_rm_statsmodels.fit()

# # Print the ANOVA table

# print(results_anova_rm_statsmodels.anova_table)

print(model_anova_rm_statsmodels.fit())

Anova

==================================

F Value Num DF Den DF Pr > F

----------------------------------

time 5.4368 2.0000 28.0000 0.0101

==================================
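The same F-statistic can also be obtained from ordinary least squares, provided that the subject is included as an additional categorical predictor so that individual differences are removed from the residuals. In statsmodels this would correspond to a formula such as blood_pressure ~ C(time) + C(case); to keep the example dependency-free, the sketch below instead compares two nested least-squares models with NumPy (subjects only vs subjects + time). Unlike AnovaRM or rm_anova, this approach provides no sphericity test or correction.

```python
import numpy as np
from scipy.stats import f

# Wide layout: one row per patient, columns = before, during, after
X = np.column_stack([
    [165, 155, 138, 150, 149, 135, 145, 170, 138, 144, 165, 139, 141, 149, 135],
    [145, 139, 141, 145, 155, 138, 150, 166, 143, 145, 155, 165, 139, 141, 137],
    [140, 133, 140, 145, 149, 125, 142, 160, 140, 142, 133, 140, 141, 140, 133],
]).astype(float)
n, r = X.shape

# Long layout: one value per row, with subject and time indices
y = X.ravel()                         # row-major: all time points of patient 0, then 1, ...
subject = np.repeat(np.arange(n), r)
time = np.tile(np.arange(r), n)

def rss(design):
    """Residual sum of squares of a least-squares fit of y on the design matrix."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return resid @ resid

intercept = np.ones((n * r, 1))
subject_dummies = (subject[:, None] == np.arange(1, n)).astype(float)  # n - 1 columns
time_dummies = (time[:, None] == np.arange(1, r)).astype(float)        # r - 1 columns

rss_reduced = rss(np.hstack([intercept, subject_dummies]))             # no time effect
rss_full = rss(np.hstack([intercept, subject_dummies, time_dummies]))  # with time effect

df_num, df_den = r - 1, (n - 1) * (r - 1)
F = ((rss_reduced - rss_full) / df_num) / (rss_full / df_den)
p_value = f.sf(F, df_num, df_den)
print(f"F({df_num}, {df_den}) = {F:.4f}, P = {p_value:.4f}")
```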

Non-parametric methods#

In previous chapters, we explored non-parametric alternatives to t-tests and correlation analysis. Similarly, when the assumptions of ANOVA (or rmANOVA) are not met, such as normality or homogeneity of variances, we can turn to non-parametric methods. These methods offer more flexibility and are less sensitive to the underlying distribution of the data.

We’ll focus on how to perform these tests in Python using the SciPy and Pingouin libraries and interpret the results. While we won’t delve into the mathematical details of these tests to keep the chapter concise, we’ll highlight their key advantages and limitations.

Kruskal-Wallis test#

The Kruskal-Wallis test is a non-parametric alternative to the one-way ANOVA. It is used to compare the medians of three or more groups when the assumptions of ANOVA, such as normality and homogeneity of variances, are not met.

Here’s how the Kruskal-Wallis test works:

Rank the data: all the data points from all groups are combined and ranked from lowest to highest, regardless of their group membership.

Calculate the rank sum for each group: the ranks of the data points within each group are summed to obtain the rank sum for that group.

Calculate the test statistic (H): the H statistic is calculated based on the rank sums and the sample sizes of the groups. It measures the variability between the rank sums, indicating how different the group medians are.

Determine the P value: the P value is calculated using the chi-squared distribution with degrees of freedom equal to the number of groups minus 1. It represents the probability of obtaining an H statistic as extreme as the one calculated, assuming that the null hypothesis is true (i.e., all group medians are equal).
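The four steps can be sketched with NumPy and SciPy. The version below also applies the usual correction for tied ranks (two pain thresholds equal 41 in these data), which scipy.stats.kruskal applies as well; the group values are the same hand-typed copy of the 'anova' dataset used earlier, assumed to match Pingouin’s.

```python
import numpy as np
from scipy.stats import rankdata, chi2, kruskal

# Pain thresholds by hair color (assumed to match Pingouin's 'anova' dataset)
groups = [
    np.array([62, 60, 71, 55, 48]),  # Light Blond
    np.array([63, 57, 52, 41, 43]),  # Dark Blond
    np.array([42, 50, 41, 37]),      # Light Brunette
    np.array([32, 39, 51, 30, 35]),  # Dark Brunette
]

# 1. Rank all observations together (ties get their average rank)
values = np.concatenate(groups)
N = len(values)
ranks = rankdata(values)

# 2. Rank sum of each group
sizes = [len(g) for g in groups]
rank_sums = np.array([chunk.sum() for chunk in np.split(ranks, np.cumsum(sizes)[:-1])])

# 3. H statistic, divided by the tie correction factor
H = 12 / (N * (N + 1)) * sum(R**2 / m for R, m in zip(rank_sums, sizes)) - 3 * (N + 1)
_, tie_counts = np.unique(values, return_counts=True)
H /= 1 - np.sum(tie_counts**3 - tie_counts) / (N**3 - N)

# 4. P value from the chi-squared distribution with (groups - 1) degrees of freedom
p_value = chi2.sf(H, len(groups) - 1)

print(f"Rank sums: {rank_sums}")
print(f"H = {H:.5f}, P = {p_value:.6f}")
print(kruskal(*groups))  # cross-check with SciPy
```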

Kruskal-Wallis test using Pingouin#

We can perform the Kruskal-Wallis test using the Pingouin library, which offers a dedicated function called kruskal. This function provides a more user-friendly interface and returns a comprehensive output that includes the test statistic, P value, and degrees of freedom.

# Perform the Kruskal-Wallis test using Pingouin

print(pg.kruskal(data=data_anova, dv='Pain threshold', between='Hair color'))

Source ddof1 H p-unc

Kruskal Hair color 3 10.58863 0.014172

The Kruskal-Wallis H statistic measures the overall difference between the group medians. A larger H statistic indicates a greater difference between the groups. In this case, H = 10.58863. Since the P value (0.014172) is less than the conventional significance level of 0.05, we reject the null hypothesis. This means that there is a statistically significant difference between the medians of at least two of the hair color groups in terms of their pain thresholds.

The Kruskal-Wallis test does not rely on the assumption of normality or homogeneity of variances, making it more robust for data that does not meet these assumptions. It can be used for ordinal data, where the data points represent ranks or ordered categories. But it can also be used with small sample sizes, like in the current example, where the normality assumption is generally difficult to assess.

However, it may be less powerful than ANOVA when the normality and homoscedasticity assumptions are met. It only tests for differences between medians, not means, and while it doesn’t assume normality, it still assumes that the groups have similar shapes of distributions.

Kruskal-Wallis test using SciPy#

We can also use the kruskal function from the scipy.stats module to compute the Kruskal-Wallis test statistic and its associated P value. Like f_oneway, it takes one array of measurements per group as separate arguments.

from scipy.stats import kruskal

# Split the pain thresholds by hair color

hair_colors = data_anova['Hair color'].unique()

groups = [

data_anova['Pain threshold'][data_anova['Hair color'] == color] for color in hair_colors

]

# Perform the Kruskal-Wallis test

kruskal(*groups)

KruskalResult(statistic=10.588630377524153, pvalue=0.014171563303136805)

Friedman’s test#

Friedman’s test is a non-parametric alternative to repeated measures one-way ANOVA. It is used to compare the medians of three or more repeated measurements within subjects when the assumptions of rmANOVA, such as normality and sphericity, are not met. Here is how Friedman’s test works:

Rank the data: for each subject, the repeated measurements are ranked from lowest to highest.

Calculate the rank sum for each condition: the ranks for each within-subject condition (e.g., time point, treatment) are summed across all subjects.

Calculate the test statistic (Q): the Q statistic is computed from the rank sums, the number of subjects, and the number of conditions. It measures how much the rank sums differ from one another, indicating how different the conditions are.

Determine the P value: the P value is calculated using the chi-squared distribution with degrees of freedom equal to the number of within-subject conditions minus 1. It represents the probability of obtaining a Q statistic at least as extreme as the one calculated, assuming that the null hypothesis is true (i.e., all group medians are equal).
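The steps above can be sketched in a few lines. This is a minimal example on hypothetical readings for six subjects measured under three conditions. No subject has tied values, so the classic formula \(Q = \frac{12}{nk(k+1)} \sum_j R_j^2 - 3n(k+1)\) can be used without a tie correction. Here \(n\) is the number of subjects, \(k\) the number of conditions, and \(R_j\) the rank sum of condition \(j\). The result is checked against SciPy's `friedmanchisquare`.

```python
import numpy as np
from scipy.stats import chi2, friedmanchisquare, rankdata

# Hypothetical readings: rows = subjects, columns = conditions (before, during, after)
readings = np.array([
    [140, 132, 135],
    [150, 138, 141],
    [128, 126, 124],
    [135, 130, 133],
    [160, 148, 151],
    [145, 139, 137],
])
n, k = readings.shape

# Step 1: rank the measurements within each subject (row)
ranks = rankdata(readings, axis=1)

# Step 2: sum the ranks of each condition across subjects
rank_sums = ranks.sum(axis=0)

# Step 3: Q statistic (no tie correction needed here)
q = 12.0 / (n * k * (k + 1)) * np.sum(rank_sums ** 2) - 3 * n * (k + 1)

# Step 4: P value from the chi-squared distribution with k - 1 degrees of freedom
p = chi2.sf(q, df=k - 1)

q_scipy, p_scipy = friedmanchisquare(*readings.T)  # one argument per condition
print(rank_sums, q, p)
print(q_scipy, p_scipy)
```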

Like the Kruskal-Wallis test, Friedman’s test does not rely on the assumption of normality or sphericity, making it more robust for data that does not meet these assumptions. It can also be used for ordinal data, where the data points represent ranks or ordered categories, and with small sample sizes, where the normality assumption is difficult to assess.

Just like the Kruskal-Wallis test, Friedman’s test has some limitations. It may be less powerful than rmANOVA when the normality and sphericity assumptions are met. Furthermore, it is usually described as a test of medians rather than means. Although it doesn’t assume normality, it still assumes that the distributions of the within-subject conditions have similar shapes.

Friedman’s test using Pingouin#

We can also perform Friedman’s test using Pingouin’s friedman function, just as easily as we did for the Kruskal-Wallis test, rmANOVA, and standard ANOVA.

```python
import pingouin as pg

# Perform Friedman's test (chi-squared method, the default)
print(
    pg.friedman(
        data=data_rmanova_long,
        dv='blood_pressure',
        within='time',
        subject='case',
    )
)
```

Source W ddof1 Q p-unc

Friedman time 0.330994 2 9.929825 0.006979

The Friedman test typically uses a Q statistic, which asymptotically follows a chi-squared distribution, for hypothesis testing. However, the chi-squared test can be overly conservative for small sample sizes and repeated measures. As an alternative, we can use an F test, which has better properties for smaller samples and behaves like a permutation test with lower computational cost.

```python
# Perform Friedman's test with the F test
print(
    pg.friedman(
        data=data_rmanova_long,
        dv='blood_pressure',
        within='time',
        subject='case',
        method='f',  # use the F test instead of the chi-squared approximation
    )
)
```

Source W ddof1 ddof2 F p-unc

Friedman time 0.330994 1.866667 26.133333 6.926573 0.004503
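The F version can be reproduced by hand from the chi-squared Q reported above. This minimal sketch assumes the following conversion. Kendall's \(W = Q / (n(k-1))\), \(F = (n-1)W / (1-W)\), and the degrees of freedom are \(k - 1 - 2/n\) and \((n-1)(k - 1 - 2/n)\). These formulas are our assumption, but they reproduce the W, ddof1, ddof2 and F values printed above. This section does not state the number of subjects. The value \(n = 15\) is inferred from ddof1 = 1.866667 = 2 - 2/15.

```python
from scipy.stats import chi2, f

q = 9.929824561403514  # Friedman chi-squared statistic (Q) reported above
n = 15                 # number of subjects (assumption: inferred from ddof1)
k = 3                  # within-subject conditions: before, during, after

w = q / (n * (k - 1))            # Kendall's W (effect size)
f_stat = (n - 1) * w / (1 - w)   # F-transformed statistic
ddof1 = (k - 1) - 2 / n          # numerator degrees of freedom
ddof2 = (n - 1) * ddof1          # denominator degrees of freedom

p_f = f.sf(f_stat, ddof1, ddof2)  # P value of the F test
p_chi2 = chi2.sf(q, df=k - 1)     # P value of the chi-squared test, for comparison

print(f"W={w:.6f}  F={f_stat:.6f}  ddof1={ddof1:.6f}  ddof2={ddof2:.6f}")
print(f"P (F test) = {p_f:.6f}, P (chi-squared) = {p_chi2:.6f}")
```

For these data the F test gives the smaller P value, in line with the chi-squared approximation being the more conservative of the two here.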

Both the chi-squared and F-test versions of the Friedman test give P values below 0.01 (P ≈ 0.007 and P ≈ 0.0045, respectively). This indicates a statistically significant difference between the medians of at least two of the repeated measurements.

Friedman’s test using SciPy#

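Finally, we can use the `friedmanchisquare` function from the scipy.stats module to obtain the test statistic and P value of Friedman’s test. It expects one array per condition, with the values aligned by subject, so we pass the columns of the wide-format data.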

```python
from scipy.stats import friedmanchisquare

# Extract the measurements for each time point (one column per condition)
before = data_rmanova['before']
during = data_rmanova['during']
after = data_rmanova['after']

# Perform Friedman's test; rows must correspond to the same subject in each column
friedmanchisquare(before, during, after)
```

FriedmanchisquareResult(statistic=9.929824561403514, pvalue=0.00697856283688688)

Two-way ANOVA#

Example of two-way ANOVA#

Two-way ANOVA is a statistical technique used to analyze the effects of two independent variables on a dependent variable. It’s also known as two-factor ANOVA.

The name "two-way" reflects the fact that each data point is classified in two ways, according to two factors. For example, imagine a study where participants are randomly assigned to one of two treatments (active or inactive) and to one of two treatment durations (short or long). In this case, each data point would be categorized by both the treatment and the duration.

Two-way ANOVA simultaneously tests three null hypotheses:

No interaction between the two factors: the effect of one factor (e.g., treatment) is the same across all levels of the other factor (e.g., duration).

No main effect of the first factor: the population means are identical across all levels of the first factor (e.g., treatment), averaged over the levels of the second factor (e.g., duration).

No main effect of the second factor: the population means are identical across all levels of the second factor (e.g., duration), averaged over the levels of the first factor (e.g., treatment).

In this section, we’ll work through the manual calculation of two-way ANOVA using another Pingouin dataset, which explores the impact of different fertilizer formulations on crop yields. As detailed elsewhere, the study investigates a new fertilizer designed to increase crop yields. The makers of the fertilizer want to identify the most effective formulation (‘Blend’) for each ‘Crop’. In this dataset, two blends (X and Y) are tested on three crop types (wheat, corn, and soy), with four plots for each combination of blend and crop, giving 24 plots in total.

Our goal is to manually calculate the two-way ANOVA to understand the effects of the fertilizer blend and crop type on the yield, as well as their potential interaction. This hands-on approach will provide a deeper understanding of the underlying calculations and principles of two-way ANOVA.

```python
import pingouin as pg

# Load the 'anova2' dataset
data_two_way_anova = pg.read_dataset('anova2')

# Display a random sample of 7 rows
data_two_way_anova.sample(7)
```

| | Ss | Blend | Crop | Yield |
|---|---|---|---|---|
| 12 | 13 | Blend Y | Wheat | 135 |
| 8 | 9 | Blend X | Soy | 166 |
| 6 | 7 | Blend X | Corn | 174 |
| 14 | 15 | Blend Y | Wheat | 176 |
| 22 | 23 | Blend Y | Soy | 159 |
| 17 | 18 | Blend Y | Corn | 132 |
| 21 | 22 | Blend Y | Soy | 145 |

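The data is currently in long format (one row per plot), but we can also reshape it into a wide format, with one column per crop, using unstack.

```python
# 'Ss' is the unique plot identifier; unstack(0) moves 'Crop' from the index to the columns
data_two_way_anova.set_index(['Crop', 'Blend', 'Ss']).unstack(0)
```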

| Blend | Ss | Yield (Corn) | Yield (Soy) | Yield (Wheat) |
|---|---|---|---|---|
| Blend X | 1 | NaN | NaN | 123.0 |
| Blend X | 2 | NaN | NaN | 156.0 |
| Blend X | 3 | NaN | NaN | 112.0 |
| Blend X | 4 | NaN | NaN | 100.0 |
| Blend X | 5 | 128.0 | NaN | NaN |
| Blend X | 6 | 150.0 | NaN | NaN |
| Blend X | 7 | 174.0 | NaN | NaN |
| Blend X | 8 | 116.0 | NaN | NaN |
| Blend X | 9 | NaN | 166.0 | NaN |
| Blend X | 10 | NaN | 178.0 | NaN |
| Blend X | 11 | NaN | 187.0 | NaN |
| Blend X | 12 | NaN | 153.0 | NaN |
| Blend Y | 13 | NaN | NaN | 135.0 |
| Blend Y | 14 | NaN | NaN | 130.0 |
| Blend Y | 15 | NaN | NaN | 176.0 |
| Blend Y | 16 | NaN | NaN | 120.0 |
| Blend Y | 17 | 175.0 | NaN | NaN |
| Blend Y | 18 | 132.0 | NaN | NaN |
| Blend Y | 19 | 120.0 | NaN | NaN |
| Blend Y | 20 | 187.0 | NaN | NaN |
| Blend Y | 21 | NaN | 140.0 | NaN |
| Blend Y | 22 | NaN | 145.0 | NaN |
| Blend Y | 23 | NaN | 159.0 | NaN |
| Blend Y | 24 | NaN | 131.0 | NaN |
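Because each plot contributes to only one crop, the wide table above is mostly NaN. A more compact view for two-way ANOVA is the \(r \times c\) table of cell means, which also makes the interaction hypothesis concrete. If there were no interaction, the difference between blends would be the same for every crop in the population. With the dataset loaded, `data_two_way_anova.pivot_table(index='Blend', columns='Crop', values='Yield', aggfunc='mean')` should give this table. To keep the sketch self-contained, we rebuild a small DataFrame from the 24 yields listed above. The variable names `records`, `df` and `blend_diff` are our own.

```python
import pandas as pd

# Yields transcribed from the wide table above (4 plots per Blend x Crop cell)
records = {
    ("Blend X", "Wheat"): [123, 156, 112, 100],
    ("Blend X", "Corn"): [128, 150, 174, 116],
    ("Blend X", "Soy"): [166, 178, 187, 153],
    ("Blend Y", "Wheat"): [135, 130, 176, 120],
    ("Blend Y", "Corn"): [175, 132, 120, 187],
    ("Blend Y", "Soy"): [140, 145, 159, 131],
}
df = pd.DataFrame(
    [(blend, crop, y) for (blend, crop), ys in records.items() for y in ys],
    columns=["Blend", "Crop", "Yield"],
)

# Cell means: rows = levels of Blend, columns = levels of Crop
cell_means = df.pivot_table(index="Blend", columns="Crop", values="Yield", aggfunc="mean")
print(cell_means)

# Blend Y minus Blend X within each crop; under "no interaction" these
# differences would be equal across crops (in the population)
blend_diff = cell_means.loc["Blend Y"] - cell_means.loc["Blend X"]
print(blend_diff)
```

In this sample, Blend Y yields more than Blend X for wheat and corn but less for soy. The interaction test in two-way ANOVA assesses whether such differences are larger than expected from within-cell scatter alone.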

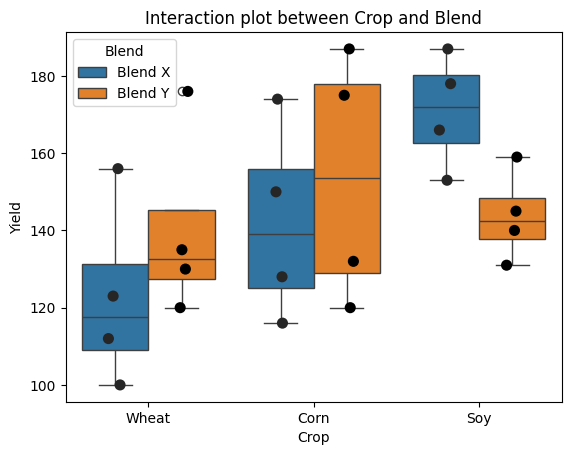

```python
import matplotlib.pyplot as plt
import seaborn as sns

# Create the boxplot of yield for each crop, split by blend
sns.boxplot(
    x='Crop',
    y='Yield',
    data=data_two_way_anova,
    hue='Blend',
)

# Overlay the individual plots as points
sns.stripplot(
    x='Crop', y='Yield', data=data_two_way_anova,
    hue='Blend', dodge=True,  # separate points for each 'Blend' within 'Crop'
    palette='dark:black', size=8, legend=False,
)

plt.title('Interaction plot between Crop and Blend');
```

How two-way ANOVA works#

Two-way ANOVA involves partitioning the total variation in the dependent variable into four components:

Interaction effect \(\text{SS}_\text{AXB}\): this component measures the extent to which the effect of one factor depends on the level of the other factor.

Between-rows variation \(\text{SS}_\text{A}\): this component measures the variation between the different levels of the first factor (e.g., the difference between the mean scores of the active and inactive treatment groups).

Between-columns variation \(\text{SS}_\text{B}\): this component measures the variation between the different levels of the second factor (e.g., the difference between the mean scores for the short and long duration groups).

Residuals or within-cells variation \(\text{SS}_\text{W}\) or \(\text{SS}_\text{within}\): this component measures the variation within each cell of the design (i.e., within each combination of the two factors).

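The total variation, SST or \(\text{SS}_\text{total}\), is the sum of these four components (exactly so in a balanced design): \(\text{SS}_\text{total} = \text{SS}_\text{A} + \text{SS}_\text{B} + \text{SS}_\text{AXB} + \text{SS}_\text{within}\).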

Similar to repeated measures ANOVA, the formulas for \(\text{SS}_\text{A}\), \(\text{SS}_\text{B}\), \(\text{SS}_\text{AXB}\), and \(\text{SS}_\text{within}\) in two-way ANOVA are more complex than in standard one-way ANOVA, because they need to account for the effects of both factors and their interaction. Below, we give them for the simplest case of a balanced design.

We can compute the total sum of squares (SST) using the universal formula:

\[
\text{SS}_\text{total} = \sum_{i}\sum_{j}\sum_{k} \left(x_{ijk} - \bar{x}\right)^2
\]

where:

\(x_{ijk}\) is the individual data point for the \(k\)th observation in the \(j\)th level of Factor B and the \(i\)th level of Factor A.

\(\bar{x}\) is the grand mean of all the data.

Then, the sum of squares for the first factor (Factor A) can be written as:

\[
\text{SS}_\text{A} = m\,c \sum_{i} \left(\overline{x}_{i} - \bar{x}\right)^2
\]

where:

\(m\) is the number of observations per cell (assuming equal cell sizes).

\(c\) is the number of levels of Factor B, i.e., the number of columns in an \(r \times c\) table.

\(\overline{x}_{i}\) is the mean of the \(i\)th level of Factor A, across all levels of Factor B, i.e., the mean of \(\{x_{ijk}: 1 \le j \le c, 1 \le k \le m\}\).

Important note: if the sample sizes \(m\) for the different combinations of factors (cells) are unequal, we have an unbalanced design. In such cases, the traditional approach for calculating sums of squares might not be appropriate. Instead, we can use a regression-based approach, e.g., using statsmodels, to perform the two-way ANOVA, as described elsewhere. This approach is more flexible and can handle unbalanced designs effectively. Pingouin can take care of unbalanced designs and will automatically analyze the data via statsmodels (discussed later in the chapter).

Similarly, the sum of squares for the second factor (Factor B) equals:

\[
\text{SS}_\text{B} = m\,r \sum_{j} \left(\overline{x}_{j} - \bar{x}\right)^2
\]

where:

\(r\) is the number of levels of Factor A, i.e., the number of rows in an \(r \times c\) table.

\(\overline{x}_{j}\) is the mean of the \(j\)th level of Factor B, across all levels of Factor A, i.e., the mean of \(\{x_{ijk}: 1 \le i \le r, 1 \le k \le m\}\).

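Finally, the sum of squares for the interaction equals:

\[
\text{SS}_\text{AXB} = m \sum_{i}\sum_{j} \left(\overline{x}_{ij} - \overline{x}_{i} - \overline{x}_{j} + \bar{x}\right)^2
\]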

where:

\(\overline{x}_{ij}\) is the mean of the cell corresponding to the \(i\)th level of Factor A and the \(j\)th level of Factor B.

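The formula for the sum of squares error (SSW, \(\text{SS}_\text{within}\), or \(\text{SS}_\text{error}\)) is:

\[
\text{SS}_\text{within} = \sum_{i}\sum_{j}\sum_{k} \left(x_{ijk} - \overline{x}_{ij}\right)^2
\]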

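Because \(\text{SS}_\text{total} = \text{SS}_\text{A} + \text{SS}_\text{B} + \text{SS}_\text{AXB} + \text{SS}_\text{within}\) in a balanced design, we can also obtain \(\text{SS}_\text{within}\) by subtracting all the effect sums of squares from SST, as we demonstrated in the previous calculations.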
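The formulas above translate almost line by line into NumPy. This is a minimal sketch, assuming a balanced design stored as an array of shape \((r, c, m)\). Factor A is Blend (\(r = 2\)) and Factor B is Crop (\(c = 3\), ordered Wheat, Corn, Soy), with \(m = 4\) plots per cell. The 24 yields are transcribed from the wide table above. The sketch also checks that the four components add up to the total.

```python
import numpy as np

# x[i, j, k]: k-th plot for the i-th blend (X, Y) and j-th crop (Wheat, Corn, Soy)
x = np.array([
    [[123, 156, 112, 100], [128, 150, 174, 116], [166, 178, 187, 153]],  # Blend X
    [[135, 130, 176, 120], [175, 132, 120, 187], [140, 145, 159, 131]],  # Blend Y
], dtype=float)
r, c, m = x.shape

grand = x.mean()               # grand mean
mean_a = x.mean(axis=(1, 2))   # x_bar_i: one mean per level of Factor A
mean_b = x.mean(axis=(0, 2))   # x_bar_j: one mean per level of Factor B
mean_ab = x.mean(axis=2)       # x_bar_ij: one mean per cell

ss_total = np.sum((x - grand) ** 2)
ss_a = m * c * np.sum((mean_a - grand) ** 2)
ss_b = m * r * np.sum((mean_b - grand) ** 2)
ss_ab = m * np.sum((mean_ab - mean_a[:, None] - mean_b[None, :] + grand) ** 2)
ss_within = np.sum((x - mean_ab[:, :, None]) ** 2)

print(f"SS_A={ss_a:.6f}  SS_B={ss_b:.6f}  SS_AXB={ss_ab:.6f}")
print(f"SS_within={ss_within:.6f}  SS_total={ss_total:.6f}")
# In a balanced design the partition is exact
print(np.isclose(ss_total, ss_a + ss_b + ss_ab + ss_within))
```

For unbalanced data, this direct use of the formulas no longer gives a clean partition. That is where the regression-based approach mentioned earlier comes in.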

Manual calculation of two-way ANOVA#

To test the null hypotheses, we calculate the F-ratio for each effect by dividing the mean square (SS divided by degrees of freedom) for the effect by the mean square for the within-cells variation. The F-ratio represents the ratio of the explained variance to the unexplained variance. The associated P value indicates the probability of obtaining an F-ratio at least as extreme as the one observed, assuming the null hypothesis is true.
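This step can be sketched as a small function for a balanced \(r \times c\) design with \(m\) observations per cell. It uses the standard degrees of freedom: \(r - 1\) for Factor A, \(c - 1\) for Factor B, \((r-1)(c-1)\) for the interaction, and \(rc(m-1)\) within cells. The function name `two_way_f_table` is hypothetical. The sums of squares passed in the usage line are our own result of applying the formulas above to the 24 yields (rounded to six decimals). They are not output reported by the chapter.

```python
from scipy.stats import f as f_dist


def two_way_f_table(ss_a, ss_b, ss_ab, ss_w, r, c, m):
    """Return {source: (SS, df, MS, F, P)} and MS_within for a balanced r x c design."""
    dfs = {"A": r - 1, "B": c - 1, "AxB": (r - 1) * (c - 1)}
    df_w = r * c * (m - 1)   # within-cells (error) degrees of freedom
    ms_w = ss_w / df_w       # mean square within: the common denominator
    table = {}
    for source, ss in (("A", ss_a), ("B", ss_b), ("AxB", ss_ab)):
        ms = ss / dfs[source]                       # mean square of the effect
        f_ratio = ms / ms_w                         # explained / unexplained variance
        p = f_dist.sf(f_ratio, dfs[source], df_w)   # P(F >= observed) under H0
        table[source] = (ss, dfs[source], ms, f_ratio, p)
    return table, ms_w


# Blend = Factor A (r = 2), Crop = Factor B (c = 3), m = 4 plots per cell
table, ms_w = two_way_f_table(2.041667, 2736.583333, 2360.083333, 9753.25, r=2, c=3, m=4)
for source, (ss, dof, ms, f_ratio, p) in table.items():
    print(f"{source:>3}  SS={ss:9.3f}  df={dof}  MS={ms:8.3f}  F={f_ratio:.3f}  P={p:.3f}")
```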

The table below outlines the partitioning of variance in a two-way ANOVA, including the main effects of each factor, their interaction, and the within-cell error. It also shows the corresponding degrees of freedom, mean squares, and F-ratios used to test the significance of each effect.

Source of variation |

Sum of squares |

Degrees of freedom |

Mean square |

F-ratio |