Simple linear regression#

Introduction#

In the previous chapter, we explored the concept of correlation, which measures the strength and direction of the linear relationship between two variables. Now, we’ll delve into simple linear regression, a powerful technique that allows us to go beyond merely describing the relationship to actually modeling it.

One way to think about linear regression is that it fits the “best line” through a scatter plot of data points. This line, often called the regression line, captures the essence of the linear relationship between the variables. Imagine drawing a line through a cloud of points - linear regression finds the line that minimizes the overall distance between the points and the line itself.

But there’s more to it than just drawing a line. Linear regression also provides a model that represents the relationship mathematically. So, another way to look at linear regression is that it fits this simple model to the data, aiming to determine the most likely values of the parameters that define the model. These estimated parameters provide valuable insights into the relationship between the variables.

In this chapter, we’ll explore the key concepts of simple linear regression, including:

Estimating the regression coefficients: we’ll learn how to calculate the best-fitting line using the method of ordinary least squares (OLS) in Python.

Assessing model fit: we’ll revisit R-squared and introduce other metrics to evaluate how well the model captures the data.

Assumptions and diagnostics: we’ll briefly recap the key assumptions of linear regression and learn how to diagnose potential violations.

Inference and uncertainty: we’ll explore confidence intervals and hypothesis testing to quantify the uncertainty associated with the estimated coefficients.

Making predictions: we’ll see how to use the fitted model to predict values of the dependent variable for new values of the independent variable.

Advanced techniques: we’ll touch upon topics like regularization and maximum likelihood estimation (MLE) to enhance model fitting.

Fitting model to data#

Theory and definitions#

In simple linear regression, we aim to model the relationship between a response variable (\(y\)) and a covariate (\(x\)). Our measurements of these variables are not perfect and contain some inherent noise (\(\epsilon\)). We can express this relationship mathematically as:

where \(f(x)\) is the regression function that describes the relationship between \(x\) and \(y\). This equation essentially states that the observed response is a combination of a systematic component and a random component.

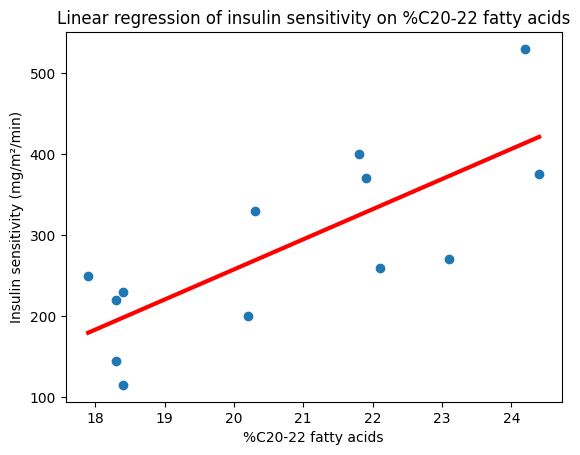

In this chapter we focus on linear combination, a specific type of regression function. For example, we can model insulin sensitivity (the dependent variable) as a function of the percentage of C20-22 fatty acids (the independent variable). This simple linear regression model can be written as:

where:

\(y_i\) represents the insulin sensitivity response variable for the \(i\)-th individual

\(x_i\) represents the percentage of C20-22 fatty acids covariate for the \(i\)-th individual

\(\beta_0\) is the intercept, representing the value of \(y\) when \(x\) is zero

\(\beta_1\) is the slope of the line, representing the change in \(y\) for a one-unit change in \(x\)

\(\epsilon_i\) is the random error for the \(i\)-th individual

The intercept (\(\beta_0\)) and slope (\(\beta_1\)) are the parameters of the model, and our goal is to estimate their true values from the data. We assume that the errors (\(\epsilon_i\)) are independent and follow a Gaussian distribution with a mean of zero: \(\epsilon \sim \mathcal{N}(0, \sigma^2)\).

We can generalize the simple linear regression model to include multiple covariates, to model more complex relationships. This allows us to consider the relationship between the response variable and multiple predictors simultaneously. The model with \(p\) covariates can be written as:

where:

\(y_i\) is the response variable for the \(i\)-th observation

\(x_{i1}, x_{i2}, ..., x_{ip}\) are the values of the \(p\) covariates for the \(i\)-th observation

\(\beta_0, \beta_1, \beta_2, ..., \beta_p\) are the regression coefficients, including the intercept (\(\beta_0\))

\(\epsilon_i\) is the error term for the \(i\)-th observation

This can be expressed more compactly using summation notation:

Note that \(\beta_0 = \beta_0 \times 1\), so this coefficient can be integrated into the sum, therefore the regression function can be expressed in matrix notation as:

where:

\(\mathbf{x}_i = \begin{bmatrix} 1 & x_{i1} & x_{i2} & \dots & x_{ip} \end{bmatrix}^T\) is the covariate vector for the \(i\)-th observation, including 1 for the intercept

\(\boldsymbol{\beta} = \begin{bmatrix} \beta_0 & \beta_1 & \beta_2 & \dots & \beta_p \end{bmatrix}^T\) is the vector of regression coefficients, including the intercept

In linear models, the function \(f(\mathbf{x}_i, \boldsymbol{\beta})\) is designed to model how the average value of \(y\) changes with \(x\). The error term \(\epsilon\) accounts for the fact that individual data points will deviate from this average due to random variability. Essentially, we’re using a linear function to model the mean response, while acknowledging that there will always be some unpredictable variation around that mean.

Finally, the relationship between the response variable and multiple predictors for all observations in the dataset can be expressed even more concisely in matrix notation as:

where:

\(\mathbf{y} = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}\) is the vector of response variables

\(\mathbf{X} = \begin{bmatrix} 1 & x_{11} & x_{12} & \dots & x_{1p} \\ 1 & x_{21} & x_{22} & \dots & x_{2p} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & x_{n1} & x_{n2} & \dots & x_{np} \end{bmatrix}\) is the design matrix

\(\boldsymbol{\beta} = \begin{bmatrix} \beta_0 \\ \beta_1 \\ \beta_2 \\ \vdots \\ \beta_p \end{bmatrix}\) is the vector of regression coefficients

\(\boldsymbol{\epsilon} = \begin{bmatrix} \epsilon_1 \\ \epsilon_2 \\ \vdots \\ \epsilon_n \end{bmatrix}\) is the vector of error terms

Least squares#

How do we actually find the “best” values for the parameters that accurately capture this relationship? Ordinary least squares (OLS) provides a method for estimating these coefficients by minimizing the sum of the squared differences (the sum of squared errors (SSE), or sum of squared residuals) between the observed data points and the values predicted by the regression line:

\(S(\boldsymbol{\beta})\) is a measure of the overall discrepancy between the observed data points (\(y_i\)) and the values predicted by the regression model. Squaring the residuals ensures that positive and negative differences contribute equally to the overall measure of discrepancy. In simpler terms, imagine we have a scatter plot of data points and we draw a line through them. \(S(\boldsymbol{\beta})\) represents the total sum of the squared vertical distances between each point and the line. OLS aims to find the line, i.e., the values of \(\boldsymbol{\beta}\), that makes this total distance as small as possible. Mathematically, we’re looking for:

The ‘hat’ notation is used to denote an estimate of a true value. So, \(\hat{\beta}\) represents the estimated value of the regression coefficients, while \(\hat{y}\) represents the estimated or predicted value of the response variable based on our model.

Using the vector and matrix notation, we can write:

When the system is overdetermined, i.e., more data points than coefficients, an analytical solution exists for \(\boldsymbol{\beta}\). Indeed, we can find this minimum by taking the derivative of \(S(\boldsymbol{\beta})\) with respect to \(\boldsymbol{\beta}\), setting it to zero, and solving for \(\boldsymbol{\beta}\). This process is similar to finding the minimum point of a curve, where the slope, i.e., the derivative, is zero.

More formally, we calculate the gradient of the function \(S(\boldsymbol{\beta})\): \(\nabla_{\boldsymbol{\beta}} S(\boldsymbol{\beta})\). This gradient is a vector containing the partial derivatives of \(S(\boldsymbol{\beta})\) with respect to each element of \(\boldsymbol{\beta}\). Setting this gradient to zero, i.e., \(\nabla_{\boldsymbol{\beta}} S(\boldsymbol{\beta})\), gives us a system of equations that we can solve to find the values of \(\boldsymbol{\beta}\) that minimize \(S(\boldsymbol{\beta})\):

In simple linear regression with one predictor variable, the design matrix \(\mathbf{X}\) and the parameter vector \(\boldsymbol{\beta}\) have the following forms:

To apply the matrix formula \(\hat{\boldsymbol{\beta}} = (\mathbf{X}^T \mathbf{X})^{-1} \mathbf{X}^T \mathbf{y}\), we need to calculate different parts:

Substituing these components back into the matrix equation and perform the matrix multiplication and inverse to obtain the following equations for \(\hat{\beta_0}\) and \(\hat{\beta_1}\):

where:

\(\bar{x}\) is the mean of the predictor variable \(x\)

\(\bar{y}\) is the mean of the response variable \(y\)

\(s_{xy}\) is the covariance between \(x\) and \(y\)

\(s^2_x\) is the variance of \(x\)

These equations provide a direct way to calculate the intercept and slope in simple linear regression. Recall that covariance measures the direction and strength of the linear relationship between two variables, and variance measures the spread or variability of a single variable. The slope estimate (\(\hat{\beta}_1\)) is the ratio of the covariance between \(x\) and \(y\) to the variance of \(x\). The intercept estimate (\(\hat{\beta}_0\)) is then calculated based on the slope and the means of \(x\) and \(y\).

Calculating coefficients in Python#

Now that we’ve explored the theoretical foundations of simple linear regression and how to estimate the coefficients using OLS, let’s dive into some practical applications using Python. We’ll use the same dataset from the previous chapter on correlation analysis, where we examined the relationship between insulin sensitivity and the percentage of C20-22 fatty acids in muscle phospholipids.

Dataset#

In the previous chapter, we explored the relationship between insulin sensitivity and the percentage of C20-22 fatty acids in muscle phospholipids using data from Borkman and colleagues. We visualized this relationship with scatterplots and quantified its strength and direction using correlation coefficients. Remember that correlation analysis treats both variables symmetrically, and it doesn’t assume a cause-and-effect relationship or a direction of influence.

Now, we’ll take this exploration a step further with simple linear regression. Instead of just describing the association, we’ll build a model that predicts insulin sensitivity based on the percentage of C20-22 fatty acids. This implies a directional relationship, where we’re specifically interested in how changes in fatty acid composition might affect insulin sensitivity.

While correlation provides a valuable starting point for understanding the relationship between two variables, linear regression offers a more powerful framework for modeling and predicting that relationship:

Estimate the strength and direction of the effect: we can quantify how much insulin sensitivity is expected to change for a given change in fatty acid composition.

Make predictions: we can use the model to predict insulin sensitivity for new individuals based on their fatty acid levels.

Test hypotheses:we can formally test whether there is a statistically significant relationship between the variables.

import pandas as pd

# Example data from the book, page 319 (directly into a DataFrame)

data = pd.DataFrame({

# percentage of C20-22 fatty acids

'per_C2022_fatacids': [17.9, 18.3, 18.3, 18.4, 18.4, 20.2, 20.3, 21.8, 21.9, 22.1, 23.1, 24.2, 24.4],

# insulin sensitivity index

'insulin_sensitivity': [250, 220, 145, 115, 230, 200, 330, 400, 370, 260, 270, 530, 375],

})

data.head()

| per_C2022_fatacids | insulin_sensitivity | |

|---|---|---|

| 0 | 17.9 | 250 |

| 1 | 18.3 | 220 |

| 2 | 18.3 | 145 |

| 3 | 18.4 | 115 |

| 4 | 18.4 | 230 |

SciPy#

The SciPy package provides basic statistical functions, including a function for simple linear regression.

from scipy.stats import linregress

# Load the data

X = data['per_C2022_fatacids']

y = data['insulin_sensitivity']

# Calculate the coefficients (we will discuss the metrics in a subsequent section)

results_scipy = linregress(X, y)

slope_scipy, intercept_scipy, *_ = results_scipy # Extract the coefficients

# print(res) # Print the entire result set

print("Intercept:", intercept_scipy)

print("Slope:", slope_scipy)

Intercept: -486.54199459921034

Slope: 37.20774574745539

The linregress function provides the estimated coefficients for our regression model. In this case, we find a slope of 37.2. This means that for every 1 unit increase in the percentage of C20-22 fatty acids in muscle phospholipids, we expect, on average, a 37.2 mg/m²/min increase in insulin sensitivity.

The intercept value we obtain is -486.5. However, in this specific example, the intercept doesn’t have a clear biological interpretation. It represents the estimated insulin sensitivity when the percentage of C20-22 fatty acids is zero, which is not a realistic scenario in this context. It’s important to remember that extrapolating the linear model beyond the range of the observed data can lead to unreliable conclusions.

These results suggest a positive association between the percentage of C20-22 fatty acids and insulin sensitivity within the observed data range. However, to fully understand the reliability and significance of this relationship, we’ll need to further explore the model’s fit and perform statistical inference.

Pingouin#

The pingouin.linear_regression function provides a more user-friendly output than SciPy, presenting the regression results in a convenient table format. While SciPy returns only the core statistics, i.e., slope, intercept, r-value, p-value, and standard errors, Pingouin provides a more comprehensive output, including additional information such as t-values, adjusted r-squared, and confidence intervals for the coefficients, all organized in a clear and easy-to-read table.

import pingouin as pg

model_pingouin = pg.linear_regression(

X=X,

y=y,

remove_na=True,

coef_only=False, # Otherwise returns only the intercept and slopes values

as_dataframe=True, # Otherwise returns a dictionnay of results

alpha=.05,

)

pg.print_table(model_pingouin)

names coef se T pval r2 adj_r2 CI[2.5%] CI[97.5%]

------------------ -------- ------- ------ ------ ----- -------- ---------- -----------

Intercept -486.542 193.716 -2.512 0.029 0.593 0.556 -912.908 -60.176

per_C2022_fatacids 37.208 9.296 4.003 0.002 0.593 0.556 16.748 57.668

The Pingouin output provides us with more detailed information about the regression model, including the 95% confidence interval for the slope and the intercept. This interval, ranging from 16.748 to 57.668, gives us a range of plausible values for the true slope of the relationship between %C20-22 fatty acids and insulin sensitivity in the population. The fact that this interval does not include zero provides further support for a relationship between these variables. If the interval included zero, it would suggest that the observed slope could plausibly be due to chance alone.

We also see that the R-squared value is 0.593. This means that 59% of the variability in insulin sensitivity can be explained by the linear relationship with %C20-22 fatty acids. The remaining 41% of variability could be due to other factors not included in the model, measurement error, or simply natural biological variation. R² and other metrics will be discussed in more details in the next section.

Finally, the P value associated with the slope is 0.002. This P value tests the null hypothesis that there is no relationship between %C20-22 fatty acids and insulin sensitivity. In this case, the very small P value provides strong evidence against the null hypothesis, suggesting that the observed relationship is unlikely to be due to random chance.

We’ll discuss the calculation and interpretation of standard errors, confidence intervals and P values in more detail later in the chapter.

Statsmodels#

While SciPy and Pingouin provide tools for linear regression, they don’t offer a comprehensive summary output like the one we see in Statsmodels. Statsmodels, with its focus on statistical modeling, provides a dedicated OLS class (within the statsmodels.regression.linear_model module) that not only calculates the regression coefficients but also generates a detailed summary table containing a wide range of statistical information about the model.

In order to utilize the convenience of R-style formulas for defining our regression model, we import the formula.api module from statsmodels and assign it the alias smf. This allows us to specify the model in a more concise and readable way, similar to how it’s done in the R programming language.

import statsmodels.formula.api as smf

import warnings

# Suppress all UserWarnings, incl. messages related to small sample size

warnings.filterwarnings("ignore", category=UserWarning)

model_statsmodels = smf.ols("insulin_sensitivity ~ per_C2022_fatacids", data=data)

results_statsmodels = model_statsmodels.fit()

#print(results.summary()) # Classical output of the result table

print(results_statsmodels.summary2())

Results: Ordinary least squares

=======================================================================

Model: OLS Adj. R-squared: 0.556

Dependent Variable: insulin_sensitivity AIC: 151.2840

Date: 2024-12-12 12:11 BIC: 152.4139

No. Observations: 13 Log-Likelihood: -73.642

Df Model: 1 F-statistic: 16.02

Df Residuals: 11 Prob (F-statistic): 0.00208

R-squared: 0.593 Scale: 5760.1

-----------------------------------------------------------------------

Coef. Std.Err. t P>|t| [0.025 0.975]

-----------------------------------------------------------------------

Intercept -486.5420 193.7160 -2.5116 0.0289 -912.9081 -60.1759

per_C2022_fatacids 37.2077 9.2959 4.0026 0.0021 16.7475 57.6680

-----------------------------------------------------------------------

Omnibus: 3.503 Durbin-Watson: 2.172

Prob(Omnibus): 0.173 Jarque-Bera (JB): 1.139

Skew: 0.023 Prob(JB): 0.566

Kurtosis: 1.550 Condition No.: 192

=======================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is

correctly specified.

The statsmodels OLS summary provides a wealth of information about the fitted regression model in three key sections:

Model summary - This section provides an overall assessment of the model’s fit

Coefficients table - This table provides detailed information about each coefficient

Model diagnostics - This section includes several diagnostic tests to assess the validity of the model assumptions

By carefully examining these different sections of the statsmodels OLS summary, we can gain a comprehensive understanding of the fitted regression model, its performance, and the validity of its underlying assumptions.

We can access each of these sections individually using the tables attribute, which is a list containing each table as a separate element. In addition, the params attribute of the fitted statsmodels object stores the estimated regression coefficients. For example, to access the table with the coefficients, their standard errors, t-values, P values, and confidence intervals.

# Get the coefficients table

coef_table_statsmodels = results_statsmodels.summary().tables[1]

# Print the coefficients table

print(coef_table_statsmodels)

======================================================================================

coef std err t P>|t| [0.025 0.975]

--------------------------------------------------------------------------------------

Intercept -486.5420 193.716 -2.512 0.029 -912.908 -60.176

per_C2022_fatacids 37.2077 9.296 4.003 0.002 16.748 57.668

======================================================================================

By carefully examining the coefficient table, we can gain valuable insights into the magnitude, significance, and uncertainty of the relationships between the predictor variables and the response variable:

‘coef’: this column displays the estimated values of the regression coefficients. In simple linear regression, we have two coefficients: the intercept (

Intercept) and the slope associated with the predictor variable (in our case,per_C2022_fatacids).‘std err’: this column shows the standard errors of the coefficient estimates. The standard error is a measure of the uncertainty or variability associated with each estimate. Smaller standard errors indicate more precise estimates.

‘t’: this column presents the t-values for each coefficient. The t-value is calculated by dividing the coefficient estimate by its standard error. It’s used to test the null hypothesis that the true coefficient is zero.

‘P>|t|’: this column displays the P values associated with the t-tests. The P value represents the probability of observing the estimated coefficient (or a more extreme value) if the true coefficient were actually zero. A low P value (typically below 0.05) suggests that the predictor variable has a statistically significant effect on the response variable.

‘[0.025 0.975]’: this column shows the 95% confidence intervals for the true values of the coefficients. The confidence interval provides a range of plausible values within which we can be 95% confident that the true coefficient lies.

Note that the calculation of the standard errors, t-values, P values, and confidence intervals in this table relies on the assumption that the errors in the regression model are normally distributed. We will discuss these values later in this chapter.

We can also directly display the estimated intercept and slope.

# Print the parameters

print("The parameters of the model")

print(results_statsmodels.params)

The parameters of the model

Intercept -486.541995

per_C2022_fatacids 37.207746

dtype: float64

NumPy#

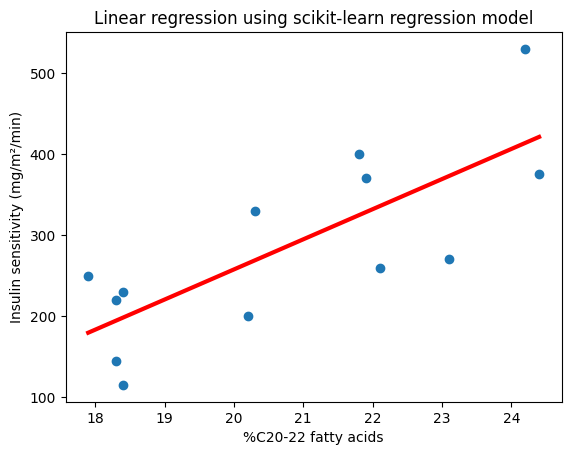

NumPy offers the numpy.polyfit function that fits a polynomial of a specified degree to the data. In our case, we use deg=1 to fit a straight line (a polynomial of degree 1). It returns an array of polynomial coefficients with the highest power first, i.e., slope followed by intercept. The function integrates well with plotting libraries like Matplotlib. We can easily use the returned coefficients to generate the equation of the line and plot it alongside the data.

So for simple linear regression, numpy.polyfit can be more concise than SciPy, Pingouin or statsmodels, especially if the primary goal is to obtain the coefficients for plotting or basic calculations.

import numpy as np

import matplotlib.pyplot as plt

# Fit the model using numpy.polyfit

coefficients_numpy = np.polyfit(X, y, deg=1) # deg=1 specifies a linear fit (degree 1)

slope_numpy, intercept_numpy = coefficients_numpy

print("Slope (NumPy):", slope_numpy)

print("Intercept (NumPy):", intercept_numpy)

# Plot the data and the fitted line

plt.scatter(X, y)

plt.plot(X, slope_numpy * X + intercept_numpy, color="red", lw=3)

plt.xlabel("%C20-22 fatty acids")

plt.ylabel("Insulin sensitivity (mg/m²/min)")

plt.title("Linear regression of insulin sensitivity on %C20-22 fatty acids");

Slope (NumPy): 37.20774574745538

Intercept (NumPy): -486.54199459921

Assessing model fit#

Now that we’ve visualized the relationship between insulin sensitivity and %C20-22 fatty acids and explored different ways to estimate the regression coefficients, it’s crucial to assess how well our model actually fits the data. This step helps us determine how effectively the model captures the underlying relationship and how reliable it is for making predictions.

The Statsmodels library provides a comprehensive summary output that offers valuable insights into these aspects. Let’s take a look at the first table from this output, which provides an overall summary of the model’s fit.

# Get the coefficients table

assessment_table_statsmodels = results_statsmodels.summary().tables[0]

# Print the coefficients table

print(assessment_table_statsmodels)

OLS Regression Results

===============================================================================

Dep. Variable: insulin_sensitivity R-squared: 0.593

Model: OLS Adj. R-squared: 0.556

Method: Least Squares F-statistic: 16.02

Date: Thu, 12 Dec 2024 Prob (F-statistic): 0.00208

Time: 12:11:17 Log-Likelihood: -73.642

No. Observations: 13 AIC: 151.3

Df Residuals: 11 BIC: 152.4

Df Model: 1

Covariance Type: nonrobust

===============================================================================

The model summary provides an overall assessment of the fitted regression model:

‘R-squared’: this familiar metric denoted as R², also known as the coefficient of determination, quantifies the proportion of variance in the response variable that is explained by the predictor variable(s). It ranges from 0 to 1, where a higher value indicates a better fit.

‘Adj. R-squared’: the adjusted R² is a modified version of R² that takes into account the number of predictors in the model. It penalizes the addition of unnecessary predictors that don’t significantly improve the model’s explanatory power, helping to prevent overfitting.

‘F-statistic’: this statistic tests the overall significance of the regression model. It assesses whether at least one of the predictor variables has a non-zero coefficient, meaning it has a statistically significant effect on the response variable. This is conceptually similar to the F-test we encountered in the chapter on comparing two unpaired means, where we tested for the equality of variances.

‘Prob (F-statistic)’: this is the p-value associated with the F-statistic. A low P value (typically below 0.05) provides evidence that the model as a whole is statistically significant, meaning that at least one of the predictors is likely to have a real effect on the response variable.

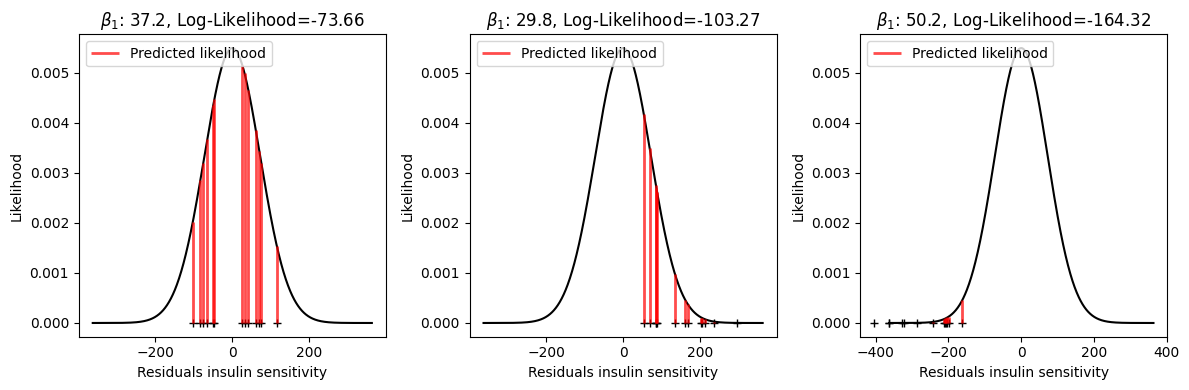

‘Log-Likelihood’: this value reflects how well the model fits the observed data, assuming that the errors are normally distributed. A higher log-likelihood indicates a better fit.

‘AIC’: the Akaike Information Criterion (AIC) is a measure of model fit that penalizes models with more parameters. It’s useful for comparing different models, even if they are not nested. Lower AIC values generally indicate a better balance between model fit and complexity. We encountered AIC before in the chapter on comparing survival curves, where it provided valuable guidance in model selection.

‘BIC’: the Bayesian Information Criterion (BIC) is similar to AIC but imposes a stronger penalty for models with more parameters. Like AIC, lower BIC values are generally preferred.

By examining these summary statistics, we can gain an overall understanding of the model’s performance, its explanatory power, and its statistical significance.

R-squared#

In the model summary table, we see several metrics that provide insights into the model’s fit. One of the most commonly used metrics is R², which quantifies the proportion of variance in the response variable explained by the predictor variable.

Note that, while sometimes denoted as r² in the context of simple linear regression, we’ll use R² throughout this chapter for consistency.

We can extract the correlation of coefficient (Pearson’s r) calculated by scipy.stats.linregress and square it, or get the R² attribute of the fitted Statsmodel object and the Pingouin’s result table.

# Calculate R-squared from scipy.stats results

r_value_scipy = results_scipy.rvalue # type: ignore

r_squared_scipy = r_value_scipy**2

# Print R-squared values from different packages

print(f"R² (SciPy):\t {r_squared_scipy:.4f}")

print(f"R² (Pingouin):\t {model_pingouin.r2.iloc[1]:.4f}")

R² (SciPy): 0.5929

R² (Pingouin): 0.5929

While Python libraries provide convenient ways to obtain R², it’s also helpful to understand how it’s calculated manually. This provides deeper insight into the meaning of R² and its connection to the concept of variance.

Recall from the previous chapter that Pearson’s correlation coefficient is calculated by dividing the covariance of two variables by the product of their standard deviations. R² is simply the square of this correlation coefficient:

Let’s define the total sum of squares (TSS) as a measure of the total variability of the response variable (y) around its mean, the residual sum of squares (RSS), also called sum of squared residuals (SSR), as a measure of the variability in y that is not explained by the regression model:

where \(y_i\) is the \(i\)-th observed value of \(y\), \(\bar{y}\) is the mean of \(y\), and \(\hat{y}_i\) is the \(i\)-th predicted/estimated value of \(y\) from the regression model.

The key connection is that the variance of y (\(s^2_y\)) is directly proportional to TSS, as we have seen in the chapter about the quantification of scatter of continuous data with the definition of the sample variance:

where \(n\) is the number of observations. Moreover, we can substitute the expression of the predicted value fror the \(i\)-th observation into the RSS formula using the OLS estimates for the intercept and slope:

The code snippet below demonstrates how to calculate R² manually using the compute_rss and estimate_y functions. It uses the intercept and slope estimated coefficients from the NumPy results to calculate the predicted values, and subsequently the RSS. Finally, it calculates TSS and uses both RSS and TSS to compute R-squared.

# Define functions to compute RSS and estimate y

def compute_rss(y_estimate, y):

return sum(np.power(y - y_estimate, 2))

def estimate_y(x, b_0, b_1):

return b_0 + b_1 * x

# Calculate RSS and TSS

rss = compute_rss(estimate_y(X, intercept_numpy, slope_numpy), y)

tss = np.sum(np.power(y - y.mean(), 2))

# Calculate and print R-squared

print(f"TSS (manual calculation): {tss:.4f}")

print(f"RSS (manual calculation): {rss:.4f}")

print(f"R² using TSS and RSS: {1 - rss/tss:.4f}")

TSS (manual calculation): 155642.3077

RSS (manual calculation): 63361.3740

R² using TSS and RSS: 0.5929

We can also access the R², TSS, and RSS values directly from the Statsmodels results object using its rsquared, centered_tss, and ssr attributes, respectively. This provides a convenient way to retrieve these key metrics for further analysis or comparison.

# Print R², TSS, and RSS from statsmodels attributes

print(f"TSS (Statsmodels): {results_statsmodels.centered_tss:.4f}")

print(f"RSS (Statsmodels): {results_statsmodels.ssr:.4f}")

print(f"R² (Statsmodels): {results_statsmodels.rsquared:.4f}")

TSS (Statsmodels): 155642.3077

RSS (Statsmodels): 63361.3740

R² (Statsmodels): 0.5929

To assess how well our regression model explains the outcome (insulin sensitivity), we can compare it to a simple baseline model: predicting the mean. Imagine we have no information about the predictor variable (%C20-22 fatty acids). In this case, the best prediction we could make for any individual’s insulin sensitivity would be the overall mean insulin sensitivity.

This “mean model” is represented by a horizontal line on the scatter plot, and the deviations of the data points from this line represent the residuals around the mean. The variance of the response variable, denoted as \(s^2_y\), quantifies the average squared error of this mean model.

By comparing the variability explained by our regression model (captured by R²) to the variability around the mean (captured by \(s^2_y\)), we can gauge how much better our model performs than simply predicting the mean.

We’ll explore this concept in more detail and discuss other methods for comparing models in the next chapter. For now let’s have a look at other metrics can help us assess model fit.

Adjusted R-squared#

While R² provides a useful measure of how well a model fits the data, it has a drawback: it always increases when we add more predictors to the model, even if those predictors don’t truly improve the model’s explanatory power. This can lead to overfitting, where the model performs well on the training data but poorly on new, unseen data.

Adjusted R² (\(R^2_a\)) addresses this issue by taking into account the number of predictors in the model. It penalizes the addition of unnecessary predictors that don’t contribute significantly to explaining the variation in the response variable. This helps to prevent overfitting and provides a more realistic assessment of the model’s goodness-of-fit.

The formula for adjusted R² is:

where \(R^2\) is the R-squared value, \(n\) is the number of observations in the dataset, and \(p\) is the number of predictor variables in the model.

Note that \(n - p - 1\) is the degrees of freedom of the residuals. It accounts for the fact that we’ve estimated \(p + 1\) parameters (including the intercept) in the regression model. In simple linear regression (where \(p = 1\)), we’ve estimated two parameters, the intercept and the slope. Using degrees of freedom provides a more accurate estimate of the population variance of the errors. When we estimate parameters from the data, we lose some degrees of freedom, as the estimated parameters impose constraints on the residuals. We can extract the degrees of freedom of the residuals from the fitting model results.

Adjusted R² can be interpreted as the proportion of the variance in the response variable explained by the predictors, adjusted for the number of predictors. It ranges from \(-\inf\) to 1, where a higher value indicates a better fit. Unlike R², adjusted R² can decrease if adding a new predictor doesn’t improve the model’s explanatory power sufficiently to offset the penalty for increased complexity.

# Get number of observations

n = results_statsmodels.nobs # Number of observations; also n=len(data)

#p = 1 # Number of predictors in simple linear regression

df_residuals = results_statsmodels.df_resid # degrees of freedom of the residuals

# Calculate adjusted R²

#adjusted_r_squared = 1 - (1 - r_squared_scipy) * (n - 1) / (n - p - 1)

adjusted_r_squared = 1 - (1 - r_squared_scipy) * (n - 1) / df_residuals

# Print adjusted R² from manual calculation, and from Pingouin and Statsmodels

print(f"Adjusted R² (manual):\t {adjusted_r_squared:.4f}")

print(f"Adjusted R² (pingouin):\t {model_pingouin.adj_r2[1]:.4f}")

print(f"Adjusted R² (Statsmodels):{results_statsmodels.rsquared_adj:.4f}")

Adjusted R² (manual): 0.5559

Adjusted R² (pingouin): 0.5559

Adjusted R² (Statsmodels):0.5559

In our simple linear regression example, the adjusted R² is slightly lower than the R² value. This is because adjusted R² applies a small penalty even for having one predictor. However, the primary interpretation of R², i.e., the proportion of variance explained, remains the same. The true benefit of adjusted R² will become clearer when we compare models with multiple predictors in later chapters.

Mean squared error#

Mean Squared Error (MSE) is a common metric used to quantify the accuracy of a regression model. It measures the average of the squared differences between the actual (observed) values and the values predicted by the model:

MSE is closely related to the RSS that we discussed earlier. The only difference is the scaling factor (degrees of freedom of the residuals):

where \(n - p - 1\) is the degrees of freedom of the residuals, as defined for \(R^2_a\). MSE essentially calculates the average squared “error” of the model’s predictions. Squaring the errors ensures that positive and negative errors contribute equally to the overall measure. A lower MSE indicates that the model’s predictions are, on average, closer to the actual values, suggesting a better fit.

Because MSE squares the errors, it gives more weight to larger errors. This can be useful if you want to penalize larger deviations more heavily. MSE has desirable mathematical properties that make it convenient for optimization and analysis. It’s a widely used and well-understood metric in regression analysis.

However, MSE is expressed in squared units of the response variable, which can be less intuitive for interpretation. And like other metrics based on squared errors, MSE can be sensitive to outliers, as large errors have a disproportionate impact on the overall value.

Despite these limitations, MSE remains a valuable tool for assessing the accuracy of regression models and comparing their performance, as we will see in the next chapter.

The mse_resid attribute of the fitted statsmodels result object directly gives the MSE of the residuals. We can also connect this to the RSS by dividing RSS by the number of observations.

# Access MSE directly

mse = results_statsmodels.mse_resid

# Calculate MSE from RSS

mse_from_rss = rss / df_residuals

print(f"MSE (Statsmodels):\t {mse:.2f}")

print(f"MSE (from RSS and DF):\t {mse_from_rss:.2f}")

MSE (Statsmodels): 5760.12

MSE (from RSS and DF): 5760.12

Root mean squared error#

Root Mean Squared Error (RMSE) is another commonly used metric to evaluate the accuracy of a regression model. It’s closely related to MSE but offers a more interpretable measure of error. RMSE is simply the square root of MSE:

RMSE represents the average magnitude of the errors in the model’s predictions, expressed in the same units as the response variable. It can be interpreted as the “typical” or “standard” deviation of the residuals. A lower RMSE indicates that the model’s predictions are, on average, closer to the actual values, suggesting a better fit.

The main advantage of RMSE over MSE is that it’s in the same units as the response variable. This makes it easier to understand the magnitude of the errors in a meaningful way. RMSE allows for easier comparison of models with different response variables, as the errors are expressed in the original units. RMSE is directly derived from MSE, so they both measure the same underlying concept: the average magnitude of the errors. However, RMSE provides a more interpretable representation of this error.

RMSE is a valuable metric for evaluating regression models due to its interpretability in the original units of the response variable. It provides a clear and understandable measure of the typical error made by the model, making it easier to assess and compare model performance.

# Calculate RMSE from MSE

rmse = np.sqrt(mse)

print("RMSE:", rmse)

RMSE: 75.89548673252867

Assumptions and diagnostics#

Assumptions for linear regression#

We’ve explored how to fit a simple linear regression model and assess its overall fit using metrics like R² and MSE. Now, let’s delve deeper into the assumptions underlying this model and how we can check for potential problems. These assumptions are essential for ensuring that our results are valid and reliable.

In the previous chapter on correlation analysis, we discussed several important concepts that are also relevant for linear regression. These include:

Linearity: the relationship between the predictor variable and the response variable is linear.

Normality: the errors are normally distributed.

Sphericity: this assumption refers to the structure of the variance-covariance matrix of the errors. In simpler terms, it means that the variability of the errors is constant across all values of the predictors (homoscedasticity) and that the errors are not correlated with each other (no autocorrelation). In the context of simple linear regression with only one predictor, sphericity essentially boils down to homoscedasticity. However, in multiple regression, sphericity also encompasses the absence of correlations between the errors associated with different predictors.

Absence of outliers: outliers can have a disproportionate influence on the results, so it’s important to identify and handle them appropriately.

While these were crucial for interpreting correlation coefficients, they are even more critical in the context of linear regression. Violations of these assumptions can affect the accuracy of the estimated coefficients, standard errors, confidence intervals, and P values, potentially leading to misleading conclusions.

Beyond the points mentioned above, there are a few more subtle yet important assumptions that underpin the validity of our analysis:

Correct specification: this means that the chosen model (simple linear regression in our case) accurately reflects the true relationship between the variables. If the true relationship is non-linear or involves other predictors not included in our model, our results might be misleading.

Strict exogeneity: this assumption states that the predictor variables are not correlated with the error term. Violating this assumption can lead to biased estimates of the coefficients.

No perfect multicollinearity: this assumption is more relevant in multiple regression, where we have multiple predictors. It states that none of the predictors should be a perfect linear combination of the others. If there is perfect multicollinearity, it becomes impossible to estimate the unique effects of individual predictors.

While normality of the errors is not strictly required for estimating the coefficients, it is essential for valid statistical inference, such as calculating confidence intervals and performing hypothesis tests.

To assess these assumptions, we’ll primarily utilize graphical methods, such as residual plots, histograms, and Q-Q plots. These visual tools provide an intuitive way to identify potential issues. Additionally, we’ll take advantage of the convenient diagnostic table provided by Statsmodels, which offers a concise summary of several key statistical tests.

Residual analysis#

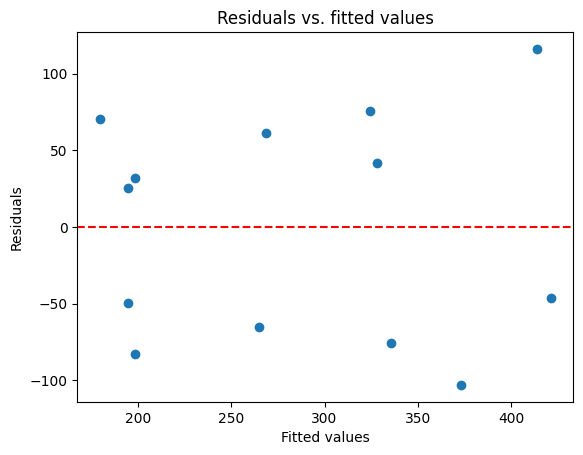

Residual analysis is a cornerstone of evaluating regression models. By examining the residuals, i.e., the differences between the observed and predicted values, we can gain valuable insights into the model’s adequacy and potential areas for improvement.

Recall that the residuals represent the “unexplained” portion of the data, the variation that our model doesn’t capture. If the model is appropriate and its assumptions hold, we expect the residuals to behave randomly.

Residual plots#

One of the most informative ways to assess the residuals is by plotting them against the predicted values (or the predictor variable itself). This is a standard diagnostic plot in regression analysis. Ideally, we should see a random scatter of points around zero, with no discernible patterns. Here are some common patterns to watch out for in residual plots:

Non-linearity: if the points form a curve or a U-shape, it suggests that the relationship between the variables might not be linear. This indicates that a simple linear model might not be appropriate, and we might need to consider a more complex model or transformations of the variables.

Heteroscedasticity: if the spread of the residuals changes systematically across the range of fitted values (e.g., fanning out or forming a cone shape), it indicates heteroscedasticity (non-constant variance). This violates one of the key assumptions of linear regression and can affect the reliability of our inferences.

Outliers: points that lie far away from the others might be outliers. These outliers can have a disproportionate influence on the regression line and can distort the results. Identifying potential outliers through residual analysis can guide us towards further investigation or specific methods for handling them.

# Get the residuals and fitted values

residuals = results_statsmodels.resid

fitted_values = results_statsmodels.fittedvalues

# Residuals vs. fitted values plot

plt.scatter(fitted_values, residuals)

plt.axhline(y=0, color='r', linestyle='--') # Add a horizontal line at zero

plt.xlabel("Fitted values")

plt.ylabel("Residuals")

plt.title("Residuals vs. fitted values")

plt.show()

This plot is similar to the sns.residplot we used in the previous chapter, as both visualize the residuals against the fitted values. However, we’re now creating the plot manually using Matplotlib to demonstrate how to access and work with the residuals directly from the Statsmodels results.

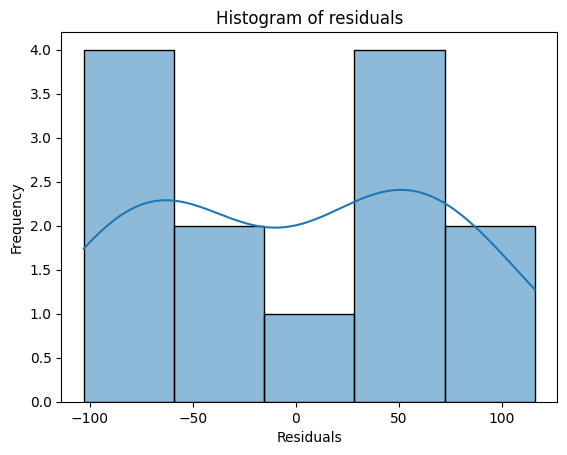

Histograms#

In addition to plotting the residuals against the predicted values, we can also examine the distribution of the residuals using a histogram. This can provide insights into whether the residuals are approximately normally distributed, which is one of the key assumptions of linear regression.

If the model’s assumptions hold, we expect the histogram of residuals to resemble a bell-shaped curve, indicating a normal distribution. However, if the histogram shows significant skewness (asymmetry) or kurtosis (peakedness or flatness), it might suggest deviations from normality. For example, if the histogram of residuals is heavily skewed to the right, it indicates that the model tends to underpredict the response variable more often than it overpredicts it. This could suggest a need for transformations or a different modeling approach.

import seaborn as sns

# Histogram of residuals

sns.histplot(residuals, kde=True) # Add a kernel density estimate for smoother visualization

plt.xlabel("Residuals")

plt.ylabel("Frequency")

plt.title("Histogram of residuals");

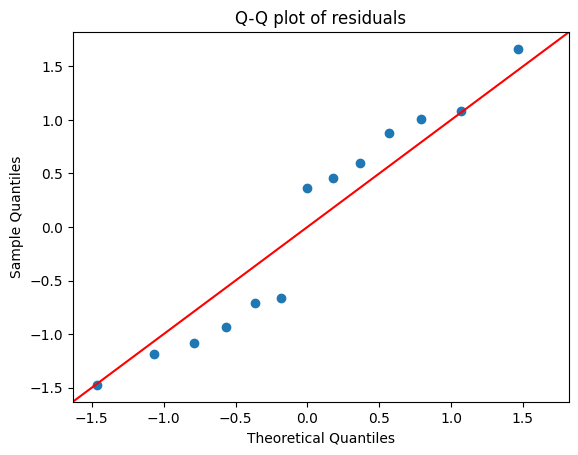

Q-Q plots#

While histograms provide a general visual impression of the distribution of residuals, quantile-quantile (Q-Q) plots offer a more precise way to assess whether the residuals follow a normal distribution.

As we have already seen in a previous chapter on normality and outlier detection, a Q-Q plot compares the quantiles of the residuals to the quantiles of a standard normal distribution. The Q-Q plot plots the quantiles of the residuals on the y-axis against the corresponding quantiles of the standard normal distribution on the x-axis.

If the residuals are normally distributed, the points on the Q-Q plot will fall approximately along a straight diagonal line. But deviations from the straight line indicate departures from normality, in particular:

S-shaped curve suggests that the residuals have heavier tails than a normal distribution (more extreme values)

U-shaped curve indicates lighter tails than a normal distribution (fewer extreme values)

Points above the line indicate that the residuals are skewed to the right (positive skew)

Points below the line indicate that the residuals are skewed to the left (negative skew)

For example, if the points on the Q-Q plot deviate substantially from the straight line at both ends, it suggests that the residuals have heavier tails than a normal distribution. This might indicate the presence of outliers or a distribution with more extreme values than expected in a normal distribution.

from statsmodels.api import qqplot

# Q-Q plot of residuals

qqplot(residuals, fit=True, line='45') # Assess whether the residuals follow a normal distribution

plt.title("Q-Q plot of residuals");

While we’ve used Statsmodels to create the Q-Q plot here, remember that we can also utilize other libraries or even implement it manually, as we explored in a previous chapter. Both SciPy and Pingouin offer functions for generating Q-Q plots, each with its own set of customization options.

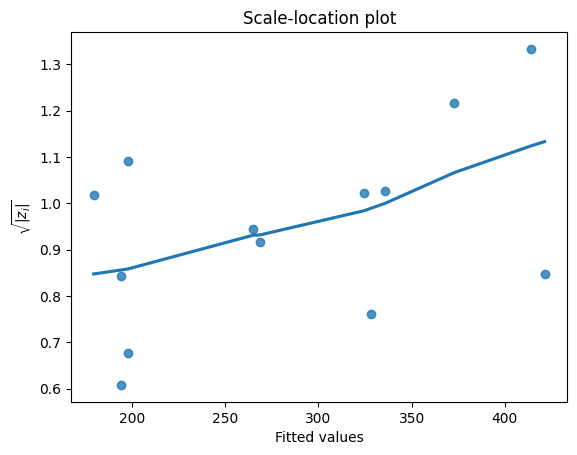

Scale-location plot#

The scale-location plot, also known as the spread-location plot, is another useful visualization for assessing the assumption of homoscedasticity. This plot helps us determine if the residuals are spread equally along the ranges of predictors.

Ideally, we want to see a horizontal line with equally (randomly) spread points in the scale-location plot. This indicates that the variability of the residuals is roughly constant across the range of predicted values, supporting the assumption of homoscedasticity.

However, if we observe a pattern where the spread of the residuals increases or decreases systematically with the predicted values, e.g., a funnel shape, it suggests heteroscedasticity. This means that the variability of the errors is not constant, which can violate the assumptions of linear regression and affect the reliability of our inferences.

For example, if the scale-location plot shows an upward trend, it suggests that the variability of the residuals increases as the predicted values increase. This might indicate that our model is less accurate for predicting higher values of the response variable.

# Retrieve a specific type of residual that is more robust for identifying outliers, more info oon the link below

# https://www.statsmodels.org/dev/generated/statsmodels.stats.outliers_influence.OLSInfluence.get_resid_studentized_external.html

model_norm_residuals = results_statsmodels.get_influence().resid_studentized_internal

model_norm_residuals_abs_sqrt = np.sqrt(np.abs(model_norm_residuals))

# Create the scale-location plot

sns.regplot(

x=fitted_values,

y=model_norm_residuals_abs_sqrt,

ci=None,

lowess=True)

plt.title("Scale-location plot")

plt.xlabel("Fitted values")

plt.ylabel(r"$\sqrt{|z_i|}$")

plt.show()

Diagnostic tests#

Statsmodels diagnostic table#

In addition to visual diagnostics, Statsmodels provides a convenient table in the summary output that includes several statistical tests. These tests can offer further insights into the validity of our model’s assumptions and help us identify potential issues.

# Get the diagnostic table

diag_table_statsmodels = results_statsmodels.summary().tables[2]

# Print the coefficients table

print(diag_table_statsmodels)

==============================================================================

Omnibus: 3.503 Durbin-Watson: 2.172

Prob(Omnibus): 0.173 Jarque-Bera (JB): 1.139

Skew: 0.023 Prob(JB): 0.566

Kurtosis: 1.550 Cond. No. 192.

==============================================================================

What does this table show:

‘Omnibus’: this test assesses the normality of the residuals. It combines measures of skewness and kurtosis to provide an overall test of whether the residuals deviate significantly from a normal distribution.

‘Prob(Omnibus)’: this is the P value associated with the Omnibus test. A low P value suggests that the residuals are not normally distributed, which could indicate a problem with the model’s assumptions.

‘Durbin-Watson’: this statistic tests for autocorrelation in the residuals. Autocorrelation occurs when the residuals are not independent of each other, which can violate the assumption of independence. A Durbin-Watson statistic around 2 suggests no autocorrelation, while values significantly less than 2 suggest positive autocorrelation.

‘Jarque-Bera (JB)’: this test also assesses the normality of the residuals by examining their skewness and kurtosis. A high p-value suggests that the residuals are normally distributed.

‘Prob(JB)’: this is the p-value associated with the Jarque-Bera test.

‘Skew’: this statistic measures the asymmetry of the distribution of the residuals. A skewness of 0 indicates a perfectly symmetrical distribution, while positive values indicate right skewness and negative values indicate left skewness.

‘Kurtosis’: this statistic measures the “peakedness” of the distribution of the residuals. A kurtosis of 3 indicates a normal distribution, while higher values indicate a more peaked distribution and lower values indicate a flatter distribution.

‘Cond. No.’: this is the condition number, which measures the sensitivity of the model to small changes in the data. A high condition number can indicate multicollinearity (high correlation between predictors), which is more relevant in multiple regression.

By examining these diagnostic tests, we can gain further insights into the adequacy of our model and potential areas for improvement. If any of these tests suggest significant deviations from the assumptions, we might need to consider transformations, different modeling approaches, or further investigation of the data.

Breusch-Pagan#

In addition to examining the scale-location plot, we can use the Breusch-Pagan test to formally test for heteroscedasticity. This statistical test assesses whether the variance of the errors is related to the predictor variables. While not directly shown in the statsmodels summary output, we can perform this test separately using the Statsmodels library.

The het_breuschpagan function returns a tuple containing the Lagrange multiplier test statistic, the P value associated with the test statistic, the F-statistic for the auxiliary regression, and the P value associated with the F-statistic.

from statsmodels.stats.diagnostic import het_breuschpagan

# Perform the Breusch-Pagan test

breusch_pagan_test = het_breuschpagan(

results_statsmodels.resid,

results_statsmodels.model.exog # Provides the design matrix (exogenous variables) used in the model

)

# Print the test results

print("Breusch-Pagan test results:", breusch_pagan_test)

print(f"P value: {breusch_pagan_test[-1]:.5f}")

Breusch-Pagan test results: (3.1971404515605957, 0.07376714520088247, 3.5875802150776823, 0.08479389740119875)

P value: 0.08479

The P value associated with the F-statistic is higher than 0.05, meaning we do not have enough evidence to reject the null hypothesis of homoscedasticity. In simpler terms, it suggests that the variability of the residuals is constant across the range of predictor values. This supports the assumption of homoscedasticity.

Exogeneity#

Recall our simple linear regression equation \(y = f(x) + \epsilon\). The exogeneity assumption states that the predictor variable (\(x\)) should not be correlated with the error term (\(\epsilon\)). In other words, the factors that influence the error term should be independent of the factors that influence the predictor variable.

Exogeneity is crucial because the residuals we analyze are estimates of the error term. If the predictor variable is correlated with the error term, the residuals will also exhibit a systematic relationship with the predictor, potentially leading to misleading patterns in the residual plots.

Unfortunately, there’s no single statistical test to definitively assess exogeneity. It often requires careful consideration of the relationship between the variables and potential sources of endogeneity (violation of exogeneity), such as omitted variables or measurement error.

However, by examining residual plots and considering the context of our analysis, we can gain some insights into the plausibility of the exogeneity assumption. If the residuals show a clear non-random pattern related to the predictor variable, it might suggest a violation of exogeneity.

Other diagnostic tools#

While we’ve focused on visual diagnostics and the Statsmodels diagnostic table in this chapter, other tools can provide further insights into our regression model.

Recall from the chapter on normality and outlier detection that leverage measures how far an observation’s predictor values are from the others, while influence measures how much an observation affects the regression results. One common measure of influence is Cook’s distance, which we explored in detail previously.

Statsmodels provides ways to calculate these measures, allowing us to identify influential data points that might warrant further investigation.

# Get the influence summary frame from the fitted model result object

influence_summary = results_statsmodels.get_influence().summary_frame()

# Print the first few rows

influence_summary.head()

| dfb_Intercept | dfb_per_C2022_fatacids | cooks_d | standard_resid | hat_diag | dffits_internal | student_resid | dffits | |

|---|---|---|---|---|---|---|---|---|

| 0 | 0.432524 | -0.399939 | 0.130740 | 1.036203 | 0.195836 | 0.511351 | 1.040043 | 0.513246 |

| 1 | 0.125778 | -0.114766 | 0.013442 | 0.369589 | 0.164447 | 0.163963 | 0.354598 | 0.157312 |

| 2 | -0.246360 | 0.224792 | 0.049815 | -0.711492 | 0.164447 | -0.315643 | -0.694551 | -0.308127 |

| 3 | -0.414219 | 0.376444 | 0.132773 | -1.192503 | 0.157350 | -0.515311 | -1.218495 | -0.526542 |

| 4 | 0.149938 | -0.136264 | 0.019598 | 0.458159 | 0.157350 | 0.197982 | 0.441066 | 0.190596 |

Consequences and remedies#

Why are these assumptions important and what we can do if they are violated?

Violating the assumptions of linear regression can have several consequences:

Biased coefficient estimates: if the linearity or exogeneity assumptions are violated, the estimated regression coefficients might be biased, meaning they don’t accurately reflect the true relationships between the variables.

Invalid inference: if the homoscedasticity or normality assumptions are violated, the standard errors, confidence intervals, and P values might be inaccurate, leading to incorrect conclusions about the statistical significance of the relationships.

Inefficient estimates: even if the coefficients are unbiased, violating assumptions can lead to less efficient estimates, meaning they have larger standard errors and are less precise.

Poor predictive accuracy: violations of assumptions can also affect the model’s ability to accurately predict new observations.

Fortunately, we have several tools to address violations of assumptions:

Transformations: transforming the predictor or response variable (e.g., taking the logarithm, square root, or reciprocal) can often help address non-linearity or heteroscedasticity.

Weighted least squares: if we detect heteroscedasticity, where the variability of the errors is not constant, we can use weighted least squares (WLS) regression. This method assigns different weights to the observations, giving more weight to those with smaller variances and less weight to those with larger variances. This can help to account for the unequal variances and improve the efficiency of the estimates. In WLS, the function we minimize, previously denoted as \(S(\boldsymbol{\beta})\), is modified to incorporate weights:

\[S(\boldsymbol{\beta}) = \sum_{i=1}^{n} w_i [y_i - f(\mathbf{x}_i, \boldsymbol{\beta})]^2\]where \(w_i\) represents the weight assigned to the \(i\)-th observation.

If, in addition to heteroscedasticity, there is also correlation between the error terms (autocorrelation), then generalized least squares (GLS) models can be used. These models are more complex but can account for both unequal variances and correlations in the errors.

Robust regression: if outliers are a concern, we can use robust regression techniques that are less sensitive to extreme values.

Non-linear models: if the relationship between the variables is clearly non-linear, we might need to consider non-linear regression models.

Understanding the consequences of violating assumptions and the available remedies empowers us to take appropriate action and ensure the validity and reliability of our linear regression analysis. By addressing potential issues, we can build more accurate and robust models that provide meaningful insights into the relationships between variables.

Statistical inference and uncertainty#

In the previous sections, we explored how to estimate the coefficients of a linear regression model and assess its overall fit. Now, we’ll delve into the realm of inference and uncertainty. This involves quantifying the uncertainty associated with our estimates and drawing conclusions about the population based on our sample data.

Standard error of the regression#

A key measure of uncertainty in linear regression is the standard error of the regression (SER), also known as the standard error of the estimate (\(S\)). SER represents the average distance that the observed values fall from the regression line:

where:

\(y_i\) is the observed value for the \(i\)-th observation

\(\hat{y}_i\) is the predicted value for the \(i\)-th observation

\(n\) is the number of observations

\(\text{RSS}\) is the residual sum of squares

Notice that we divide by \(n - 2\) in the formula, which is the degrees of freedom of the residuals. This is because we’ve estimated two parameters in the simple linear regression model: the intercept (\(\beta_0\)) and the slope (\(\beta_1\)). Estimating these parameters consumes two degrees of freedom from the data.

# Calculate S (SER)

ser = np.sqrt(rss / df_residuals)

# np.sqrt(results_statsmodels.mse_resid)

print(f"S = {ser:.4f}")

S = 75.8955

SER provides a valuable measure of the overall accuracy of our model. It tells us how much the observed values deviate from the regression line, on average, in the same units as the response variable.

Here’s why SER is important and how we can use it:

Assess the accuracy of the model: a lower SER generally indicates a better fit, where the observations are closer to the regression line. We can use SER to compare the accuracy of different models (we will discuss this topic in the next chapter about the comparison of models).

Visualize the uncertainty of the regression line: SER is a key component in calculating confidence bands around the regression line. These bands provide a visual representation of the uncertainty associated with the estimated relationship between the variables, as we will see later.

Quantify the uncertainty in our predictions: SER helps us understand how much our predictions might deviate from the actual values, on average. This is crucial for constructing prediction intervals, which we’ll discuss later in the chapter.

Identify potential outliers: we can use SER to calculate standardized residuals, which can help identify potential outliers.

Understand the overall fit: while R² provides a relative measure of the goodness of fit (the proportion of variance explained), SER provides an absolute measure of the variability around the regression line. Considering both metrics gives us a more complete picture of the model’s performance.

P values and confidence intervals#

The Statsmodels output provides standard errors, t-statistics, P values and confidence intervals for each coefficient in the model. These statistics help us quantify the uncertainty associated with the estimated relationships between the predictors and the response variable.

Remind that a confidence interval provides a range of plausible values for the true population value of a coefficient. For example, a 95% confidence interval for the slope tells us that we can be 95% confident that the true slope of the relationship between the predictor and the response falls within that interval.

A P value tests the null hypothesis that the true coefficient is zero. A low P value (typically below 0.05) suggests that the predictor variable has a statistically significant effect on the response variable, meaning that the observed relationship is unlikely to be due to chance alone.

These statistics are essential for drawing meaningful conclusions from our regression analysis. They help us understand not only the magnitude and direction of the relationships but also the level of confidence we can have in our estimates.

# Print the coefficients table

print(coef_table_statsmodels)

======================================================================================

coef std err t P>|t| [0.025 0.975]

--------------------------------------------------------------------------------------

Intercept -486.5420 193.716 -2.512 0.029 -912.908 -60.176

per_C2022_fatacids 37.2077 9.296 4.003 0.002 16.748 57.668

======================================================================================

T-tests for the coefficients#

Just as we used a t-test to assess the significance of the correlation coefficient, we can also use a t-test to evaluate the significance of each coefficient in our linear regression model. The formula for the t-statistic is similar, involving the ratio of the estimated coefficient to its standard error. However, in the context of regression, the t-test helps us determine whether a predictor variable has a statistically significant effect on the response variable:

where \(t\) is the t-statistic, \(\hat{\beta}_j\) is the estimated coefficient for the \(j\)-th predictor variable, and \(s_{\hat{\beta}_j}\) is the standard error of the estimated coefficient.

The standard error of a coefficient measures the variability or uncertainty associated with the estimated coefficient. It can be calculated using the following general formula:

where \(s^2\) is the estimated variance of the error term, i.e., the square of SER (\(S^2\)), and \([(\mathbf{X}^T \mathbf{X})^{-1}]_{j,j}\) is the \(j\)-th diagonal element of the inverted \(\mathbf{X}^T \mathbf{X}\) matrix, which represents the variance of the \(j\)-th coefficient estimate.

This leads to the following formula:

While we won’t delve into the calculation of these standard errors, it’s important to understand that they are derived from the overall variability of the data and the specific structure of the model. Fortunately, Statsmodels provides the standard errors directly in its output, so we can readily access them without performing the calculations ourselves.

# Extract the coefficients and standard errors from the statsmodels table

intercept_coef = results_statsmodels.params["Intercept"]

intercept_stderr = results_statsmodels.bse["Intercept"] # bse contains the standard errors

slope_coef = results_statsmodels.params["per_C2022_fatacids"]

slope_stderr = results_statsmodels.bse["per_C2022_fatacids"]

# Calculate the t-ratios

t_intercept = intercept_coef / intercept_stderr

t_slope = slope_coef / slope_stderr

# Print the t-ratios

print(f"t-ratio for Intercept: {t_intercept:.3f}")

print(f"t-ratio for Slope: {t_slope:.3f}")

t-ratio for Intercept: -2.512

t-ratio for Slope: 4.003

With the t-statistics and degrees of freedom in hand, we can now determine the P value for the correlation. We’ll use the cumulative distribution function (CDF) of the t-distribution to calculate this P value.

from scipy.stats import t as t_dist

# Calculate the P values (two-sided test)

intercept_pvalue = 2 * (1 - t_dist.cdf(abs(t_intercept), df_residuals))

slope_pvalue = 2 * (1 - t_dist.cdf(abs(t_slope), df_residuals))

# Print the P values

print(f"P value for Intercept: {intercept_pvalue:.5f}")

print(f"P value for Slope: {slope_pvalue:.5f}")

P value for Intercept: 0.02890

P value for Slope: 0.00208

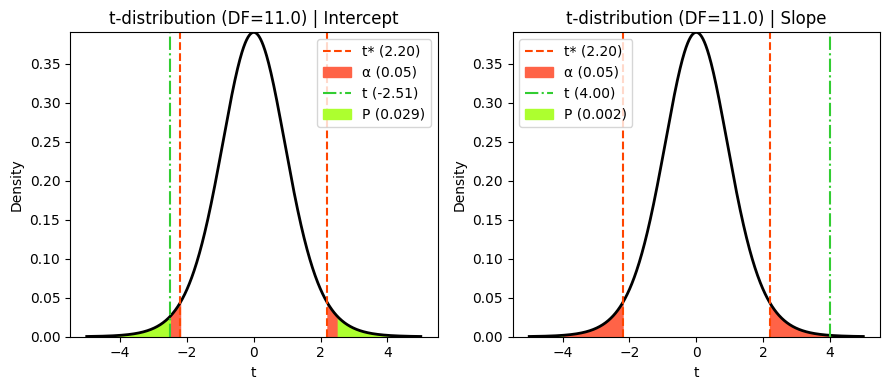

Visualizing t, critical and P values#

As we’ve done in previous chapters, we can visualize how the P value and critical values are determined using the t-statistic and the t-distribution. This visualization helps us understand the relationship between the calculated t-statistic, the degrees of freedom, and the corresponding P value, providing a clearer picture of the hypothesis testing process.

Notice that the critical t-value (t*) is the same for both coefficients. This is because in simple linear regression, both coefficients share the same degrees of freedom (n - 2), which determine the shape of the t-distribution.

# Extract the t-values for the coefficients

intercept_tvalue = results_statsmodels.tvalues['Intercept'] # Extract from the table

slope_tvalue = results_statsmodels.tvalues["per_C2022_fatacids"]

# Significance level (alpha)

α = 0.05

# Calculate critical t-values (two-tailed test)

t_crit = t_dist.ppf(1 - α/2, df_residuals)

# Generate x values for plotting

x_t = np.linspace(-5, 5, 1000)

hx_t = t_dist.pdf(x_t, df_residuals)

# Create subplots

fig, axes = plt.subplots(1, 2, figsize=(9, 4))

# Plot for Intercept

axes[0].plot(

x_t,

hx_t,

lw=2,

color='black')

# Critical value

axes[0].axvline(

x=-t_crit,

color='orangered',

linestyle='--')

axes[0].axvline(

x=t_crit,

color='orangered',

linestyle='--',

label=f"t* ({t_crit:.2f})")

# Alpha area

axes[0].fill_between(

x_t[x_t <= -t_crit],

hx_t[x_t <= -t_crit],

color='tomato',

label=f"α ({α})")

axes[0].fill_between(

x_t[x_t >= t_crit],

hx_t[x_t >= t_crit],

color='tomato')

# t-statistic

axes[0].axvline(

x=intercept_tvalue,

color='limegreen',

linestyle='-.',

label=f"t ({intercept_tvalue:.2f})")

# P value area

axes[0].fill_between(

x_t[x_t <= -abs(t_intercept)],

hx_t[x_t <= -abs(t_intercept)],

color='greenyellow',

label=f"P ({intercept_pvalue:.3f})")

axes[0].fill_between(

x_t[x_t >= abs(t_intercept)],

hx_t[x_t >= abs(t_intercept)],

color='greenyellow')

axes[0].set_xlabel("t")

axes[0].set_ylabel('Density')

axes[0].set_title(

f"t-distribution (DF={df_residuals}) | Intercept")

axes[0].margins(x=0.05, y=0)

axes[0].legend()

# Plot for Slope

axes[1].plot(x_t, hx_t, lw=2, color='black')

axes[1].axvline(

x=-t_crit, color='orangered', linestyle='--')

axes[1].axvline(

x=t_crit, color='orangered', linestyle='--',

label=f"t* ({t_crit:.2f})")

axes[1].fill_between(

x_t[x_t <= -t_crit], hx_t[x_t <= -t_crit], color='tomato',

label=f"α ({α})")

axes[1].fill_between(

x_t[x_t >= t_crit], hx_t[x_t >= t_crit], color='tomato')

axes[1].axvline(

x=slope_tvalue, color='limegreen', linestyle='-.',

label=f"t ({slope_tvalue:.2f})")

axes[1].fill_between(

x_t[x_t <= -abs(t_slope)], hx_t[x_t <= -abs(t_slope)], color='greenyellow',

label=f"P ({slope_pvalue:.3f})")

axes[1].fill_between(

x_t[x_t >= abs(t_slope)], hx_t[x_t >= abs(t_slope)], color='greenyellow')

axes[1].set_xlabel("t")

axes[1].set_ylabel('Density')

axes[1].set_title(f"t-distribution (DF={df_residuals}) | Slope")

axes[1].legend()

axes[1].margins(x=0.05, y=0)

plt.tight_layout();

Confidence interval#

To calculate the confidence interval, we need to consider the variability of the estimated coefficient, which is captured by its standard error. Here’s how it’s done:

Calculate the point estimate: this is the value of the estimated coefficient \(\hat{\beta}_j\) obtained from the regression analysis.

Calculate the standard error: the standard error of the coefficient \(s_{\hat \beta_j}\) is a measure of its variability.

Determine the degrees of freedom: the degrees of freedom for the t-distribution used in constructing the confidence interval are \(n - 2\) in simple linear regression, where \(n\) is the number of observations.

Find the critical t-value: using the desired confidence level (e.g., 95%) and the calculated degrees of freedom, we can find the corresponding critical t-value (\(t^\ast\)) from the t-distribution table or using statistical software.

Calculate the margin of error: multiply the standard error by the critical t-value: \(W_j = t^\ast \times s_{\hat \beta_j}\).

Construct the confidence interval: subtract and add the margin of error to the point estimate to obtain the lower and upper bounds of the confidence interval: \(\mathrm{CI}_{\beta_j} = \hat{\beta}_j \pm W_j\)

# Calculate the confidence interval (e.g., 95% confidence)

confidence_level = 0.95

margin_of_error_intercept = t_crit * intercept_stderr

ci_intercept = (

intercept_coef - margin_of_error_intercept,

intercept_coef + margin_of_error_intercept)

margin_of_error_slope = t_crit * slope_stderr

ci_slope = (

slope_coef - margin_of_error_slope,

slope_coef + margin_of_error_slope)

# Print the results

print(f"95% confidence interval for the Intercept: \

[{ci_intercept[0]:.3f}, {ci_intercept[1]:.3f}]")

print(f"95% confidence interval for the Slope: \

[{ci_slope[0]:.3f}, {ci_slope[1]:.3f}]")

95% confidence interval for the Intercept: [-912.908, -60.176]

95% confidence interval for the Slope: [16.748, 57.668]

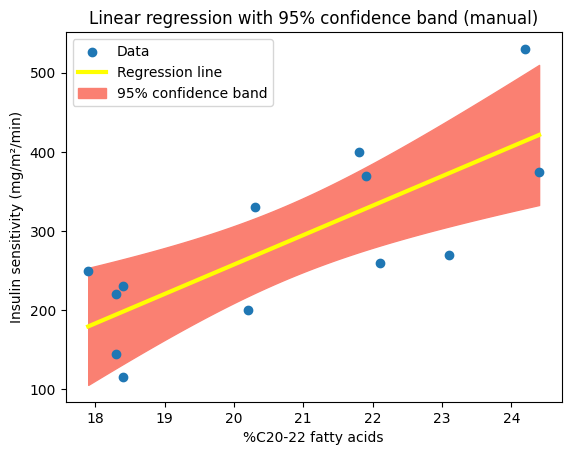

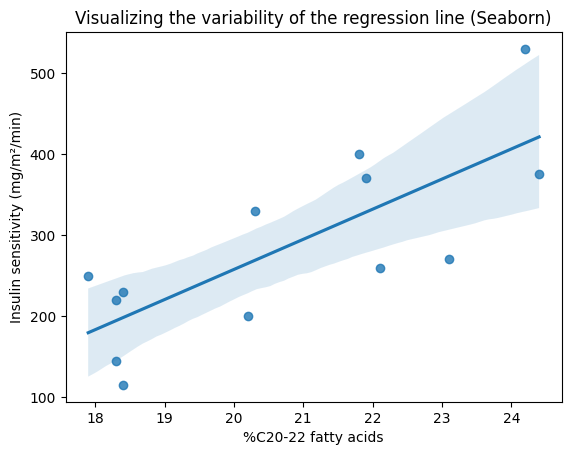

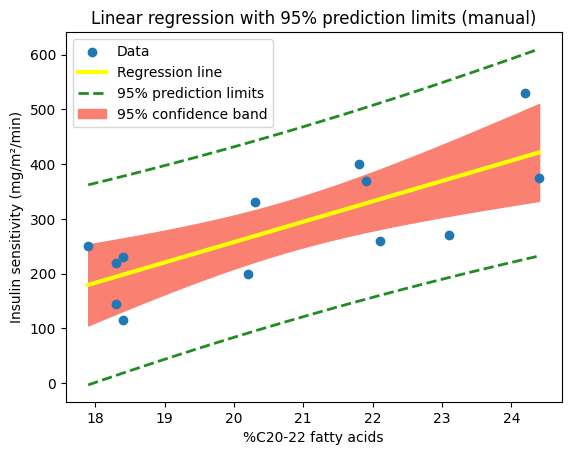

Visualizing the confidence band#

Recall that the SER (S) measures the overall variability of the observed values around the regression line. We can use this measure, together with the critical t-value for the desired confidence level, e.g., 95%, to construct a confidence band around the line. The plot_ci_manual() function calculates the margin of error for a confidence interval around the predicted mean response at a specific value of the predictor variable \(x_0\), as we predict \(\hat y_0 = \hat \beta_0 + \hat \beta_1 x_0\). The following formula is derived from the standard error of the predicted mean, taking into account both the uncertainty in the estimated coefficients and the variability of the data around the regression line:

As we can see, a larger SER will result in a wider confidence band, reflecting greater uncertainty in the estimated relationship.

To further illustrate the concept of confidence intervals and their role in quantifying uncertainty, let’s visualize the confidence band around our regression line. This visualization will provide a clear and intuitive representation of the range of plausible values for the true relationship between the variables.

We can define a plot_ci_manual() function to calculate and plot the 95% confidence (‘ci’) band around the regression line obtained using np.polyfit().

def plot_ci_manual(t, ser, n, x, x0, y0, ax=None):

"""Return an axes of confidence bands using a simple approach."""

if ax is None:

ax = plt.gca()

ci = t * ser * np.sqrt(1/n + (x0 - np.mean(x))**2 / np.sum((x - np.mean(x))**2))

ax.fill_between(x0, y0 + ci, y0 - ci, color='salmon', zorder=0)

return ax

import matplotlib.patches as mpatches

# Plot the data and the fitted line

plt.scatter(X, y, label="Data")

plt.plot(

X,

slope_numpy * X + intercept_numpy,

color="yellow", lw=3,

label="Regression line")

# Calculate and plot the CIs using the previous values for t*, S, n and X

x0 = np.linspace(np.min(X), np.max(X), 100)

y0 = slope_numpy * x0 + intercept_numpy # Calculate predicted values for x0

plot_ci_manual(t_crit, ser, n, X, x0, y0) # Plot CI on the current axes

# Create a custom legend handle for the confidence band

confidence_band_patch = mpatches.Patch(color='salmon', label='95% confidence band')

plt.xlabel("%C20-22 fatty acids")

plt.ylabel("Insulin sensitivity (mg/m²/min)")

plt.title("Linear regression with 95% confidence band (manual)")

# Get the handles and labels from the automatically generated legend

handles, labels = plt.gca().get_legend_handles_labels()

# Add the confidence band patch to the handles list

handles.append(confidence_band_patch)

# Add the legend to the plot, including all labels

plt.legend(handles=handles);

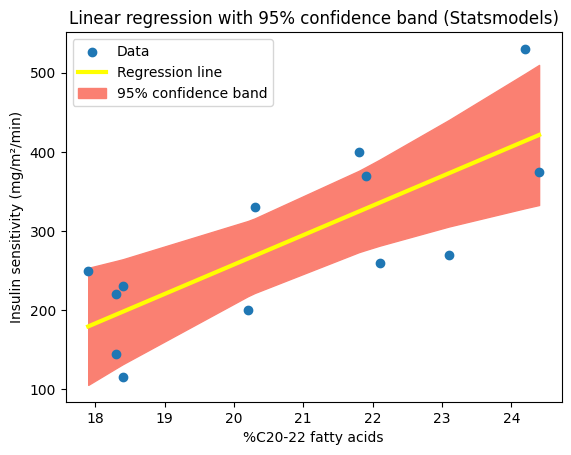

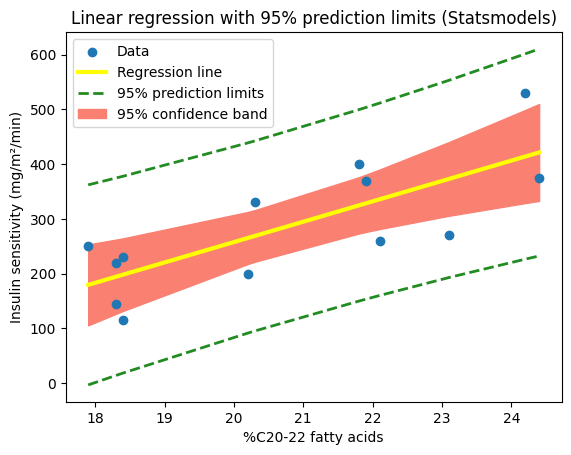

We can achieve a similar visualization using our fitted model and its get_prediction method. By leveraging its built-in capabilities, we can generate the fitted values and confidence intervals directly, using the ‘mean_ci_lower’ and ‘mean_ci_upper’ values from the prediction results. This streamlined approach eliminates the need for a custom function and showcases the convenience of Statsmodels for visualizing uncertainty in regression analysis. However, the resulting confidence band might appear less smooth compared to the one generated with a denser set of points from np.linspace, because it uses only the x-values from the original dataset.

# Plot the data and the fitted line (Statsmodels)

plt.scatter(X, y, label="Data")

plt.plot(

X,

results_statsmodels.fittedvalues,

color="yellow",

lw=3,

label="Regression line")

# Extract confidence intervals from Statsmodels predictions

predictions = results_statsmodels.get_prediction(X)

prediction_summary_frame = predictions.summary_frame(alpha=0.05)

# Plot the confidence intervals

plt.fill_between(

x=X,

y1=prediction_summary_frame['mean_ci_lower'],

y2=prediction_summary_frame['mean_ci_upper'],

color='salmon',

zorder=0)

# Create a custom legend handle for the confidence band

confidence_band_patch = mpatches.Patch(

color='salmon', label='95% confidence band')

plt.xlabel("%C20-22 fatty acids")

plt.ylabel("Insulin sensitivity (mg/m²/min)")

plt.title("Linear regression with 95% confidence band (Statsmodels)")

# Get the handles and labels from the automatically generated legend

handles, labels = plt.gca().get_legend_handles_labels()

# Add the confidence band patch to the handles list

handles.append(confidence_band_patch)

# Add the legend to the plot, including all labels

plt.legend(handles=handles);

Bootstrapping in linear regression#

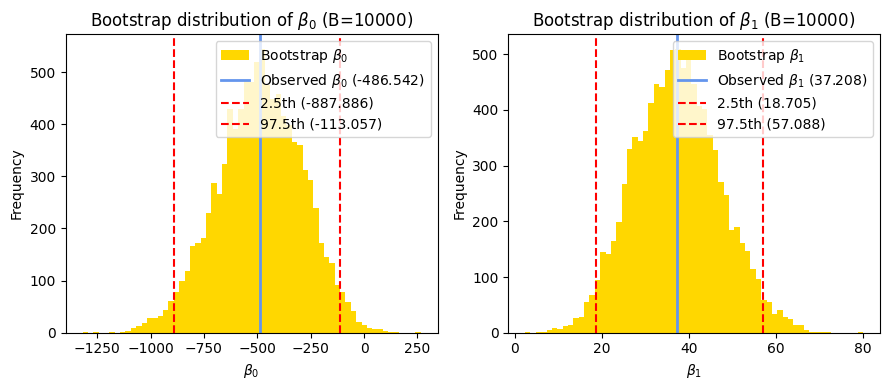

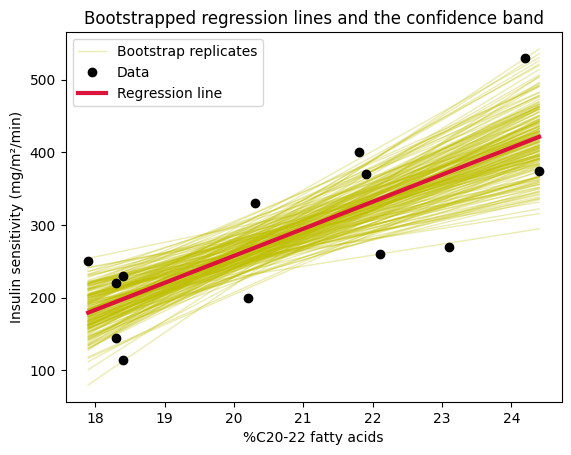

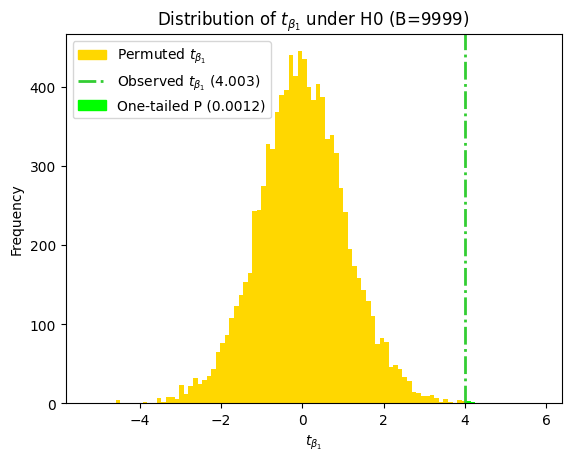

As we saw in the correlation chapter, bootstrapping is a powerful technique for statistical inference, especially when dealing with small sample sizes or when the assumptions of traditional methods might not be fully met. It allows us to estimate parameters and confidence intervals directly from the data without relying on strong distributional assumptions.

In the context of linear regression, we can use bootstrapping to: